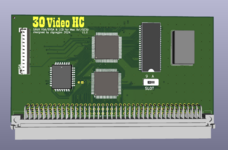

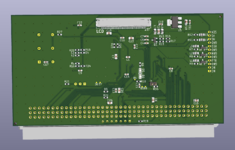

The primary focus is exposing the LCD interface, with the intent of driving the 8.4" 640x480 LCD shown above, to support those who want to swap the CRT for an LCD. Only my first prototype cards had the interface present, and their connector/pinout wasn't optimized for noise (or anything, really), so it has some issues on an extended cable, as seen above.

The secondary focus is 16-bit color. This design has a CPLD to byte-swap the data lines, which is required in 16-bit mode: the Epson chip always wants little-endian data in memory, despite supporting and respecting endianness on the bus interface. As a side effect, now that the data bus goes through the CPLD, there's a possibility I could find a purpose in making it addressable (acting as a peripheral interface?). If nothing else, it certainly could be used to drive a programmable clock generator.
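For anyone wondering what the swap amounts to, here is a minimal sketch in C of the same operation done in software. The function names `swap16` and `swap_pixels` are made up for illustration and are not taken from any driver; the CPLD does the equivalent in hardware by crossing the two byte lanes of the data bus.

```c
#include <stdint.h>
#include <stddef.h>

/* Swap the two bytes of one 16-bit pixel: 0xAABB becomes 0xBBAA.
   (Hypothetical helper, named here for illustration only.) */
static uint16_t swap16(uint16_t px)
{
    return (uint16_t)((px << 8) | (px >> 8));
}

/* Copy `count` pixels from src to dst, byte-swapping each one.
   This is the per-pixel work the CPU has to do when there is
   no hardware swap on the data bus. */
static void swap_pixels(uint16_t *dst, const uint16_t *src, size_t count)
{
    for (size_t i = 0; i < count; i++)
        dst[i] = swap16(src[i]);
}
```

At 640x480 in 16-bit color a full frame is 640 * 480 * 2 = 614,400 bytes, so every full-screen pass touches that much memory twice (one read, one write).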

The existing cards require "fixing" the 16-bit mode in software. The approach I've been using requires shadowing VRAM and copying pixels to the actual display buffer while transforming them. That isn't a fast process to begin with, and it's further complicated by the fact that there is no way to be informed that a region of the screen requires updates. The best "reliable" approach I've found is something like what MiniVNC does, which is to hash each row of the screen buffer to determine if it's changed. Except, at that point, it's about as expensive in CPU time to just swap the bytes anyways...

This is roughly what the software approach looks like.

The cursor trails can be eliminated, at least, but the slow refresh speed remains, as there's a direct trade-off between refresh speed and the amount of CPU time left for the OS and applications. Pretty awful, and a terrible user experience if someone clicks "Thousands" and expects it to work like the lower depth modes.
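To make the cost of the shadow-and-hash idea concrete, here is a rough sketch in C. It is not the actual driver code: the names `hash_row` and `refresh_changed_rows` are hypothetical, and FNV-1a is only a stand-in hash, since I'm not claiming it's the one MiniVNC uses. It also assumes the row hash table starts out zeroed and that no row happens to hash to zero on the first pass.

```c
#include <stdint.h>
#include <stddef.h>

/* FNV-1a over the bytes of one row. Stand-in hash, chosen only
   because it is short; any cheap hash would do for the sketch. */
static uint32_t hash_row(const uint16_t *row, size_t pixels)
{
    const uint8_t *p = (const uint8_t *)row;
    uint32_t h = 2166136261u;
    for (size_t i = 0; i < pixels * 2; i++) {
        h ^= p[i];
        h *= 16777619u;
    }
    return h;
}

/* Walk the shadow buffer row by row. Rows whose hash differs from
   the stored one are byte-swapped into the real display buffer.
   Returns the number of rows copied. Note that hashing reads every
   byte of every row, changed or not. */
static size_t refresh_changed_rows(uint16_t *vram, const uint16_t *shadow,
                                   uint32_t *row_hashes,
                                   size_t rows, size_t row_pixels)
{
    size_t copied = 0;
    for (size_t y = 0; y < rows; y++) {
        const uint16_t *src = shadow + y * row_pixels;
        uint16_t *dst = vram + y * row_pixels;
        uint32_t h = hash_row(src, row_pixels);

        if (h == row_hashes[y])
            continue;               /* row looks unchanged, skip it */

        row_hashes[y] = h;
        for (size_t x = 0; x < row_pixels; x++)
            dst[x] = (uint16_t)((src[x] << 8) | (src[x] >> 8));
        copied++;
    }
    return copied;
}
```

The hash loop does more work per byte than the swap loop does per pixel, which is the point made above: by the time every row has been hashed, the CPU could just as well have swapped the bytes.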
