I've been vaguely following this for a while, and I have to admit I'm still sort of stuck questioning the viability of doing the emulation in software on top of Linux, at least with any level of performance. Essentially what you're building here is an in-circuit emulator for a Motorola 68000-family chip, and while I guess I see you have abandoned the idea of literally bit-banging the individual lines with a Linux process, I do wonder exactly how much "administrivia" you intend to have the main CPU do behind the FPGAs you're adding on. General-purpose Linux is sort of terrible at real-time work, and according to the couple of white papers I've googled up even RT Linux typically has thread-switching latency in the "tens of microseconds" ballpark. (By comparison it looks like a memory read cycle on an 8 MHz 68000 takes about... 290 nanoseconds?) Seems to me those FPGAs are going to have to be doing a *lot* of work to abstract the bus so the CPU won't be spending massive amounts of time thrashing about watching for handshaking signals, etc.

Also, practically speaking, once you have this CPU bus glue how do you intend to structure the communication not only between the motherboard and the emulator, but *generally*? I mean, when a user is running a MacOS program on this setup will the code still reside in the memory on the motherboard and for every word executed the emulator will be hitting this bus bridge, pulling the instructions (presumably into a cache), hopefully executing it faster than the original CPU would, and writing any changed results back to the board (will the cache always be write-through to ensure you don't get tripped by self-modifying code, or are you going to try to be smarter about it?)? Or is your intention to use the Mac's motherboard *just* for I/O and the MacOS container is going to reside entirely on the onboard RAM on your little Linux core, with *Linux itself* handling all the I/O through the bus bridge? It's an important distinction, because I seriously doubt you're going to see much in the way of a performance boost unless you do the latter, and doing the latter is going to mean you're going to have to, in essence, create Linux drivers for every hunk of hardware in the "Zombiefied" Mac (a Linux computer ate its brain), which is going to be really entertaining for picky timing-critical pieces like the IWM.

Also have to admit I'm sort of skeptical about your performance estimates for how fast of a Motorola 68000 emulator you can do on your ARM SoC, but if you have some code you're just waiting to demo I'd be happy to be proved wrong. So far as I'm aware there's no JIT for BasiliskII on ARM yet but apparently there's some JIT support in UAE. Don't see anyone saying it's way faster than a 68060, though.

To be clear, if you're sure that you've got all these issues worked out then by all means don't let me stop you. I guess I'm just vaguely wondering if there's some precedent for a project like this? I mean, I've seen the Vampire accelerator, and there's also a project out there that adds features (and I *think* some level of acceleration but I could be wrong about that, I'm too lazy to find the links right now) to 6502-based computers via an FPGA packaged in a board that fits a 40-pin DIP socket, but I don't believe I've seen anything that tries to use a general-purpose CPU to replace(*), let alone accelerate, a system.

Let me explain how I will structure the software. This should address the concerns you have raised, Gorgonops.

The emulator engine itself should be a chunk of C code with few, if any, external dependencies, in terms of libraries and the OS it must run on.

Of course, the entire RAM and ROM of the Macintosh should be cached by the emulator. RAM writes don’t even need to be written back to the Mac except in the case of VRAM/video region writes. (Therefore other DMA devices on the PDS bus are not supported.)

The emulator can keep a big table, the “address space table,” of 4 KB pages covering the entire 4 GB memory space of the Macintosh (it can maintain different tables per function code as well). These tables will be a bit big, 1M x (size of entry), but that’s okay when you have 1 GB of RAM. Each entry in the address space table will tell how to access the given page of memory. So by changing the address space table, you can change whether an access by the emulator to a certain region of memory results in an access of the RAM cache, ROM cache, or an actual bus access (such as for VRAM or an I/O device). (The translator software itself could also use this table to store flags about the translation status of a page, but I have determined it would be better to use a different type of structure.)
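As a rough illustration of the address space table idea, here is a minimal C sketch, assuming one flat table with no per-function-code variants. All names (`page_kind`, `address_space`, `as_map`, `as_read8`, `stub_bus_read8`) are made up for the example, and the bus hook is just a stand-in for whatever would actually trigger an M68k bus cycle.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

#define PAGE_SHIFT 12u                          /* 4 KB pages */
#define PAGE_SIZE  (1u << PAGE_SHIFT)
#define NUM_PAGES  (1u << (32u - PAGE_SHIFT))   /* 1M entries cover 4 GB */

/* How an access to a page gets serviced (illustrative names). */
typedef enum { PAGE_UNMAPPED = 0, PAGE_RAM_CACHE, PAGE_ROM_CACHE, PAGE_BUS } page_kind;

typedef struct {
    page_kind kind;
    uint8_t  *host;   /* backing storage for cached pages, NULL otherwise */
} page_entry;

typedef struct {
    page_entry *pages;                   /* NUM_PAGES entries */
    uint8_t (*bus_read8)(uint32_t addr); /* hypothetical hook: real bus cycle */
} address_space;

/* Stand-in for a real bus access so the sketch can be exercised on a desktop. */
uint8_t stub_bus_read8(uint32_t addr) { return (uint8_t)(addr & 0xFFu); }

int as_init(address_space *as, uint8_t (*bus_read8)(uint32_t)) {
    /* Zeroed memory is assumed to read back as PAGE_UNMAPPED / NULL,
       which holds on mainstream platforms. */
    as->pages = calloc(NUM_PAGES, sizeof *as->pages);
    as->bus_read8 = bus_read8;
    return as->pages ? 0 : -1;
}

/* Point one page-aligned 4 KB page at a cache buffer or at the bus. */
void as_map(address_space *as, uint32_t addr, page_kind kind, uint8_t *host) {
    assert((addr & (PAGE_SIZE - 1u)) == 0);
    as->pages[addr >> PAGE_SHIFT].kind = kind;
    as->pages[addr >> PAGE_SHIFT].host = host;
}

/* One table lookup decides between cache and a real bus access. */
uint8_t as_read8(const address_space *as, uint32_t addr) {
    const page_entry *e = &as->pages[addr >> PAGE_SHIFT];
    switch (e->kind) {
    case PAGE_RAM_CACHE:
    case PAGE_ROM_CACHE: return e->host[addr & (PAGE_SIZE - 1u)];
    case PAGE_BUS:       return as->bus_read8(addr);
    default:             return 0xFFu;  /* arbitrary value for unmapped space */
    }
}
```

Remapping a region is then just a matter of calling `as_map` again for its pages; the emulator's load path never needs to know which kind of memory it hit.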

The emulator engine must be statically linked to a certain front-end in order to actually do anything. (I specify static linking because some external segment loader or whatever must do the dynamic linking, and I want to avoid any external dependencies. No need for dynamic linking.) So I plan for two front-ends.

A desktop front-end would support debug and test of the emulator engine in one of the typical Linux desktop environments.

An in-circuit emulator (ICE) front-end would support use of the emulator as an accelerator or coprocessor in an existing M68k system like the Macintosh.

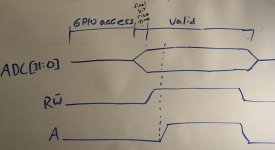

The ICE front-end would be constructed as a kernel module, in order to eliminate the context-switch overhead. Now, I have totally eliminated any operation of the M68k bus by the Snapdragon itself. The Snapdragon talks to 3 FPGAs over the multiplexed, 32-bit-wide “FPGA bus,” and then they coordinate among themselves the M68k bus access. (Enabling this was my discovery of the very affordable MachXO FPGA family.) Therefore, I have made the FPGA-Snapdragon bus design fully asynchronous. So you can, from userspace, operate the FPGA bus and command an M68k bus operation, but it would be slow, and you probably can't operate the IWM, as you say Gorgonops. So for practical purposes, it has to be done from a kernel module.

Now, there still is a bit of a bandwidth problem, but maybe not the one you would expect. The Snapdragon 410 has 122 GPIO pins and 122 GPIO pin registers. What I mean is that you can’t do a 32-bit-wide write to a register and set 32 GPIOs at once. No, you have to do 32 separate accesses. To command a bus access for a 68020+ system, some 32 pins need to be set 3 times each, plus a few control signals. So that’s 100 memory operations, plus some waiting because of rise/fall times. I dunno how fast the Snapdragon’s GPIO I/O interconnect is, but these 100 writes to I/O memory couldn’t take more than a half of a microsecond.
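To make that arithmetic easy to replay with other numbers, here is a small C sketch. The function names are invented for the example, and the count of 4 control-signal writes is only an assumption standing in for "a few" so that the total comes out at the round 100 used above.

```c
/* Single-pin register writes needed to command one M68k bus access:
   every multiplexed bus pin is set once per phase, plus control strobes. */
unsigned gpio_writes_per_access(unsigned bus_pins, unsigned phases,
                                unsigned control_writes) {
    return bus_pins * phases + control_writes;
}

/* Time each register write may take, in nanoseconds, if the whole
   sequence has to fit inside budget_ns. */
double ns_per_write_budget(double budget_ns, unsigned writes) {
    return budget_ns / (double)writes;
}
```

With 32 pins, 3 phases and the assumed 4 control writes, `gpio_writes_per_access(32, 3, 4)` gives 100, and `ns_per_write_budget(500.0, 100)` gives 5 ns per write. So the half-microsecond figure only holds if the GPIO interconnect can sustain roughly one register write every 5 ns, which is the number worth measuring on real hardware.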

By the way, it’s about 1 microsecond per word access on Mac Plus, average of 3/4 us (I think) on SE, and 1/4 us or 3/16 us on SE/30 (don’t know if the glue logic imposes a wait-state).

So in conclusion, the main hit will be in I/O performance to the peripherals and video memory, but I don’t think it’ll be too bad, since everything else will be faster. Write operations can also be sort of pipelined to improve performance, which will benefit video writes. Video reads can come from a cache in the Snapdragon's memory. So the worst case is that the I/O performance of an SE/30 or IIci will be as good as a Mac SE. But again, I think I can make it faster than that by pipelining the write operations.
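One way to picture the "sort of pipelined" writes is a posted-write queue: the emulator drops a write into a FIFO and carries on, while something else drains the FIFO onto the bus in order. The sketch below is a minimal single-threaded version in C; the names (`write_queue`, `wq_push`, `wq_pop`) and the queue depth of 64 are assumptions for illustration, not part of the actual design.

```c
#include <stdbool.h>
#include <stdint.h>

#define WQ_SIZE 64u   /* arbitrary depth; must be a power of two */

typedef struct { uint32_t addr; uint16_t data; } posted_write;

typedef struct {
    posted_write slot[WQ_SIZE];
    unsigned head;   /* next entry to drain to the bus */
    unsigned tail;   /* next free entry */
} write_queue;

/* Emulator side: queue a write and return immediately.
   Returns false when the queue is full and the caller has to wait. */
bool wq_push(write_queue *q, uint32_t addr, uint16_t data) {
    if (q->tail - q->head == WQ_SIZE)
        return false;
    q->slot[q->tail % WQ_SIZE] = (posted_write){ addr, data };
    q->tail++;
    return true;
}

/* Bus side: take the oldest pending write, preserving program order.
   Returns false when nothing is pending. */
bool wq_pop(write_queue *q, posted_write *out) {
    if (q->head == q->tail)
        return false;
    *out = q->slot[q->head % WQ_SIZE];
    q->head++;
    return true;
}
```

Two caveats a real version would have to handle: a read from an I/O device generally has to wait until the queue is drained, or it could overtake an earlier write to the same device, and sharing the queue between cores would need proper memory barriers, which this sketch leaves out.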

On the topic of using this to add all sorts of enhanced features, including web access via the Linux you've put on board, well, I'm going to toss this out there:

Have you by any chance heard of the Apple II Pi? It's a devious bundle of software that lets you take a Raspberry Pi, cable it up to an Apple II (or in fact plug it into a slot using a little interposer card that's just a really brain-dead serial port), and boot up a software disk which redirects all keyboard/joystick/mouse I/O to the Pi and lets them act as those peripherals for the Linux machine. It even allows access to the floppy drives attached to the host so disks can be read and used for the Apple IIgs emulator that runs on the Pi side, so overall it makes the setup look like a massively expanded Apple IIgs (i.e., 15MB of RAM, hard disks, network card, the works). The illusion is so convincing that many of the people who've bought the serial card are convinced that it must be doing a lot more with their Apple II than it really does. So in that vein: every Macintosh has two high-speed serial ports built into it, have you considered the idea of just interfacing your parasite Linux computer to that and creating a little extension that turns the Mac into an I/O slave? For every Mac that uses an external monitor the job is pretty much done at that point; just pair up with an HDMI to VGA adapter if you want to drive the original monitor and you're good to go.

For the toaster Macs there are various ways to drive the original CRT from a modern ARM SoC; the link shows a sort of software-intensive way of doing it but I'm relatively sure I've seen other methods that can leverage LCD interfaces or even HDMI to do it with less overhead. How about for those you just put an interposer board between the analog board plug and the motherboard that can switch on a software command between the motherboard output and the video generated by your "accelerator"? Connectivity to the host Mac would consist of said connector and a little plug that snaked out of the case and plugged into one of the serial ports. On bootup the extension to slave-ify the Mac would load, it'd establish handshaking with the Linux computer (which itself might need some time to boot), and then the screen goes *blink* briefly as control is handed off. For all practical purposes you've accomplished the same thing but you don't have to target the CPU socket.

That stuff is cool but it's not really the direction I want to go in. My aim in building the Maccelerator is to personally learn something. The added benefit is that there is something tangible to show off. Maybe you can get kinda the same effect by taking over the screen and I/O, but that's not my aim.

Let me say a little more about the performance.

I think 50x faster than SE for register-register operations is easy. Do I need to say any more? You just write the shortest ARMv8-A algorithm in assembly to implement the given 68000 instruction. Even if it takes 10 instructions, the Snapdragon's Cortex-A53 runs at 1.2 GHz, with a 533 MHz memory bus, and can dispatch multiple instructions in one clock. 68030 takes at least two clocks to execute an instruction, and it has an unsophisticated instruction cache compared to the Cortex-A53's cache system.
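To give a feel for where those "10 instructions" go, here is a minimal C sketch of one register-register instruction, `ADD.W Dn,Dm`, including the condition codes. It is plain C rather than the hand-written ARMv8-A assembly described above, and the names (`m68k_regs`, `m68k_add_w`) are made up for the example. The flag rules and the CCR bit layout follow the 68000's documented behaviour for ADD.

```c
#include <stdint.h>

/* 68000 condition code register bits */
enum { CCR_C = 1u << 0, CCR_V = 1u << 1, CCR_Z = 1u << 2,
       CCR_N = 1u << 3, CCR_X = 1u << 4 };

typedef struct {
    uint32_t d[8];   /* data registers D0-D7 */
    uint8_t  ccr;    /* condition codes */
} m68k_regs;

/* ADD.W D<src>,D<dst>: add the low words, keep the upper word of dst. */
void m68k_add_w(m68k_regs *r, unsigned src, unsigned dst) {
    uint32_t s   = r->d[src] & 0xFFFFu;
    uint32_t d   = r->d[dst] & 0xFFFFu;
    uint32_t sum = s + d;               /* bit 16 holds the carry out */
    uint32_t res = sum & 0xFFFFu;
    uint8_t  ccr = 0;

    if (sum & 0x10000u)                 ccr |= CCR_C | CCR_X; /* X mirrors C */
    if ((s ^ res) & (d ^ res) & 0x8000u) ccr |= CCR_V;        /* signed overflow */
    if (res == 0)                       ccr |= CCR_Z;
    if (res & 0x8000u)                  ccr |= CCR_N;

    r->d[dst] = (r->d[dst] & 0xFFFF0000u) | res;
    r->ccr = ccr;
}
```

Most of the work is flag bookkeeping rather than the add itself. In assembly some of it could probably lean on the host's own flags, since AArch64 `ADDS` sets N, Z, C and V, though 16-bit operands would need shifting into the top half of the register first for those flags to line up.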

Instructions which access memory will be harder to get to be 50x faster, but I'm sure it's doable when the emulator runs as a kernel module, basically hogging a whole core.

So I am ignoring the speed at which the chipset can be accessed in this speed analysis, but I don't think it will be a huge deal, especially if write operations can be pipelined to accelerate video writes.