> OK, this was interesting... the Quadra 630 beats my 5200 on Speedometer 8-bit graphics! I ran the test several times; on the 5200, 8-bit graphics is actually quite a bit faster in Mac OS 7.5.1 than in Mac OS 8.5.1 (but 16-bit is the same).
>
> | Machine | 8-bit graphics | 16-bit graphics | OS |
> |---|---|---|---|
> | Quadra 630 | 1.13 | - | Mac OS 7.5.5 |
> | 5200 | 0.95 | 0.71 | Mac OS 7.5.1 |
> | 5200 | 0.81 | 0.71 | Mac OS 8.5.1 |

Curious, since it has pretty much the same graphics chip. So it could be that the PPC 603 cache-stalling issue you've been looking at makes it that 16% slower. OTOH, because it's testing QD graphics routines (isn't it?), lots of small graphics operations will also incur more 68K overhead from ROM routines that still use 68K code (I know QD was converted to PPC, but I understand not 100% of the graphics routines were).
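As a quick check on the 16% figure, the Speedometer scores quoted above can be compared directly. This is only a sketch: it assumes a higher Speedometer score means faster, and the helper name `relative_slowdown` is made up for illustration.

```python
def relative_slowdown(reference: float, candidate: float) -> float:
    """Fraction by which `candidate` scores below `reference`."""
    return (reference - candidate) / reference


# 8-bit graphics scores as posted in the thread (assumed: higher is faster).
scores_8bit = {
    "Quadra 630 (Mac OS 7.5.5)": 1.13,
    "5200 (Mac OS 7.5.1)": 0.95,
    "5200 (Mac OS 8.5.1)": 0.81,
}

q630 = scores_8bit["Quadra 630 (Mac OS 7.5.5)"]
for name, score in scores_8bit.items():
    slowdown = relative_slowdown(q630, score)
    print(f"{name}: {slowdown:.0%} below the Quadra 630")
```

With these numbers the 5200 under Mac OS 7.5.1 comes out roughly 16% below the Quadra 630, and the gap widens under Mac OS 8.5.1.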


How did the PowerPC 603 / 5200 at 75 MHz compare to PCs (486/Pentium)?

- Thread starter noglin


> Curious, since it has pretty much the same graphics chip.

It is exactly the same chip!

> So, it could be that the PPC 603 cache stalling issue you've been looking at makes it that 16% slower.

Although, in that comparison, wouldn't the 68040 also stall?

> OTOH, because it's testing QD graphics routines (isn't it?), then lots of small graphics operations will have a higher 68K overhead for ROM routines that use 68K code too (I know QD was converted to PPC, but I understand not 100% of all graphics routines).

The 68K code *could* have been an explanation, so I checked by entering MacsBug while the test was running on Mac OS 7.5.1: NQDMapRegion was active each time I entered, and it was in PPC code. So the difference between the Q630 and the 5200 is unlikely to be QuickDraw itself.

Also, the 601 CPU with the Civic chipset beats Valkyrie by quite a lot: https://68kmla.org/bb/index.php?attachments/2025-06-29-154426_799x854_scrot-png.88203/

If 68040 also stalls during stores... and it is native code, and the video chip is the same.. what else could it be? Bus overhead?

Or did the 68040 handle cache inhibited stores in a way that it would not stall for very long? (I do not know the 68k cpu)

The original 603 probably could've gotten away with 16kB of cache if it was unified. Having it split into two 8kB sections is really what hampered it, especially with the 68LC040 Emulator which some resources suggest could run more efficiently with a minimum of 16kB (whether unified 16kB or 16+16kB I/D caches). The 601 was the only PPC chip with a unified cache for some reason (32kB). I honestly don't know what they thought they were going to do with such a small split cache on the 603.

Basically the non-PCI-based 5/6xxx series was a Q630 with a built-in PPC upgrade, so it performed about the same (though the original PPC Upgrade Card for the Q630 used a 601 so it was faster than a 603).

Crucially, the 603 operated in 32-bit bus mode, not 64-bit as in the proper NuBus and PCI models, which definitely reduced performance (same as the PB5300/Duo 2300 series which was basically the same: a re-cased PB5x0/Duo 280 with a built-in PPC upgrade). Fortunately the later 53/63xx models stepped up to a 603e, so they were less unpleasant to use than their predecessors. However, the later 53/63xx models no longer made the L2 cache standard and the cache modules are really difficult to find since they're incompatible with any other Mac model.

Anyway, the 5/6xxx's architecture meant that not only was the CPU's bus width cut in half, but everything except the ROM and L2 cache was behind Capella, which added a performance penalty (how much of one I couldn't say but I'd wager it'd be at least 2 clock cycles per transaction).

This was finally addressed in the Alchemy machines where the PPC ran natively on its own bus at 64 bits (though for some reason Apple decided to graft the old 040-based Valkyrie graphics core onto the PPC bus and call it the "Valkyrie AR" with basically no other improvements - why not just save the effort and use a modern graphics chip on PCI instead? It's not like they couldn't have just used something from S3, Trident, or C&T (PowerBooks used C&T graphics chips for years). Apple just really, really liked wasting resources on custom ICs for some reason).

Some clones actually skipped the Apple video option on their implementations of Alchemy and deleted the Valkyrie AR in favor of either a built-in ATI solution or an add-in video card. Apple finally saw reason with the Gazelle/Tanzania boards and used ATI graphics onboard instead of yet another lackluster custom solution.


> The original 603 probably could've gotten away with 16kB of cache if it was unified. Having it split into two 8kB sections is really what hampered it <snip>

Many chips in 1994/1995 had a split 8 kB + 8 kB cache, so I don't think that was the issue. The first two versions of the Pentium both had split 8 kB/8 kB caches. If I had the choice for my programs, I would prefer an 8 kB split over 16 kB unified.

> Crucially, the 603 operated in 32-bit bus mode, not 64-bit as in the proper NuBus and PCI models <snip>

Unfortunately, some article on Low End Mac has convinced many that the 603 itself runs in 32-bit mode, but this is simply not true.

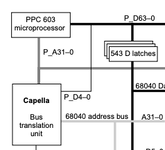

The 603 in the 5200/6200 is operating in 64-bit mode. The L1 cache (which is on-chip) has a 64-bit connection to the FPU and all cache operations are 64-bit, the same as on the 603e. On the 5200/6200 the 603 runs at a 2:1 clock ratio, i.e. a 75 MHz CPU and a 37.5 MHz 64-bit bus.

Apple connected the ROM and L2 cache directly to the 64-bit bus, so these operate at 64 bits.

The rest is true though: RAM, I/O and the Valkyrie video chip are connected to a 68040 bus, and Capella translates between the 32-bit 68040 bus and the 64-bit PPC bus. I don't know the performance impact of the translation itself. For I/O it may not be that much if the I/O devices themselves are the bottleneck.

I suppose the 68040 bus ran at 37.5MHz? For RAM and Valkyrie videochip, probably the best case is 2x slower than if it had been on a 64-bit bus?
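To put rough numbers on that "2x slower" guess, here is a back-of-the-envelope sketch of theoretical peak bandwidth. It assumes one transfer per bus clock with no wait states, and it assumes the 68040-side bus also runs at 37.5 MHz, which is only a supposition in the post above. Real throughput through Capella would be lower than either figure.

```python
def peak_bandwidth_mb_s(bus_mhz: float, bus_bits: int) -> float:
    """Theoretical peak in MB/s: one full-width transfer per bus clock."""
    return bus_mhz * bus_bits / 8


# Assumed clocks: 37.5 MHz for both buses (the 68040-side clock is a guess).
ppc_side = peak_bandwidth_mb_s(37.5, 64)   # 64-bit 603 bus (ROM, L2)
m68k_side = peak_bandwidth_mb_s(37.5, 32)  # 32-bit 68040-style bus (RAM, video, I/O)

print(f"64-bit bus peak: {ppc_side:.0f} MB/s")
print(f"32-bit bus peak: {m68k_side:.0f} MB/s")
print(f"ratio: {ppc_side / m68k_side:.1f}x")
```

Under these assumptions the width difference alone accounts for a factor of two, before any translation latency is added.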

> This was finally addressed in the Alchemy machines <snip> graft the old 040-based Valkyrie graphics core onto the PPC bus and call it the "Valkyrie AR" with basically no other improvements - why not just save the effort and use a modern graphics chip on PCI instead? <snip>

Yeah, this is an interesting question: why did they even bother making their own custom video chipsets? Was it cheaper in total somehow?

While the 5400 developer note has pretty much a copy-paste of the original Valkyrie description, the Valkyrie AR is, at least according to the ERS, quite different from the original Valkyrie. The framebuffer has two pages, which is a game changer (you can draw to one buffer and flip when ready, so no more tearing at 640x480). It has 12 write-buffer entries instead of 4, *and* supports burst writes, which is very likely another game changer (it probably means no more stalling the CPU). It also seems to have a register for changing the base address, which helps with, e.g., fast scrolling without moving data.

> <snip> 603 <snip> 16kB of cache if it was unified. <snip> two 8kB sections is really what hampered it

The papers @noglin linked to earlier explain the design decision for the split 8 kB I/D caches. For normal software it really is better than 16 kB unified. The 603 team anticipated a 100% native PPC Mac OS from the start, because Tesseract was the primary PPC Mac project until March 1993, when it failed and PDM replaced it. First 603 silicon arrived just 6 months later, so the design was already too far advanced for a unified 16 kB cache (or 16 kB + 16 kB I/D caches) to have been possible.

> <snip> The 601 was the only PPC chip with a unified cache for some reason (32kB).<snip>

My guess is that the early 1990s RS/6000s had a unified cache. The PPC 601 was essentially a 9-chip RS/6000 squeezed into a single CPU.

> <snip> 5/6xxx series was a Q630 with a built-in PPC upgrade, so it performed about the same<snip>

Credible. Correction: not merely credible, but a good insight. If I were Apple and I wanted to create a P5200/6200 prototype, the easiest way would be to start with a 630 and add a PPC601 upgrade until the PPC603 arrived. And in the meantime, sell the PPC upgrade for the Q630.

> Crucially, the 603 operated in 32-bit bus mode, not 64-bit<snip>

It had a dual bus. The CPU, L2 cache and ROM were all on a 64-bit bus, while RAM, video and I/O were on the 32-bit bus.

> Anyway, the 5/6xxx's architecture meant that not only was the CPU's bus width cut in half, but everything except the ROM and L2 cache was behind Capella,

You might mean that the ROM and L2 were on a 64-bit bus, but it sounds like you're saying they were on a 32-bit bus, and that Capella then crippled data access even further (by adding latency, and by being a slower 68040-based bus).

> which added a performance penalty (how much of one I couldn't say but I'd wager it'd be at least 2 clock cycles per transaction).

I think, from (my poor) memory, Capella could still handle Fast Page mode transactions. So the first access was 3 cycles (RAS, CAS, data), but a few subsequent ones were 2 cycles.
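For a feel of what those Fast Page mode numbers would mean, here is a small sketch using the recalled figures of 3 cycles for the first access and 2 for each following one. These cycle counts come from memory in the post above, not from a datasheet, and the sketch assumes every access in the run stays within the same DRAM page. The helper name `fpm_cycles` is hypothetical.

```python
def fpm_cycles(beats: int, first: int = 3, subsequent: int = 2) -> int:
    """Bus cycles for `beats` back-to-back accesses within one DRAM page."""
    if beats <= 0:
        return 0
    return first + (beats - 1) * subsequent


# A 32-byte 603 cache line fetched over a 32-bit bus needs 8 beats.
line_beats = 32 // 4
with_page_mode = fpm_cycles(line_beats)
# Same 8 beats if every access paid the full first-access cost instead.
without_page_mode = line_beats * 3

print(f"cache line fill with page mode:    {with_page_mode} cycles")
print(f"cache line fill without page mode: {without_page_mode} cycles")
```

On these assumed timings a cache line fill takes 17 bus cycles instead of 24, so page mode helps but every miss to RAM is still expensive.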


> So I'm gathering from this is the 603 wasn't much of an improvement over the 601.

It was a huge improvement over the 601 in terms of cost and energy efficiency. But in raw power per MHz it seems to do a bit worse than the 601. For example, the 603 has 1.6M transistors, the 601 has 2.6M and the Pentium has 3.3M, and the cost to produce scales roughly with that. The 603 can run in the 5200 without a fan and does not even need a heatsink, while even the Pentium P75 has a fan on it.

And the Pentium has its legacy for a reason: while it had some flaws, it was quite a good CPU.

Honestly, from what I'm gathering from nbench and spec, the 603 is a fine CPU for most workloads.

I also watched some videos of Win95/Win3.11, and I think, apart from graphics it looks/feels about the same as Mac OS 7.5 (Mac OS 8.6/Mac OS 9 starts to feel way slower on the 5200 though).

For graphics and games, the 5200 is quite poor. I have been able to push it quite a lot, but it requires knowing the CPU and Valkyrie limitations inside out and hand-crafting assembler code for them. It is definitely not something you would get even with quite decent C code and all compiler optimizations on, simply because the 603 stalls badly on cache misses and even worse on VRAM writes.

I had started to gather various data to get a more holistic view on it and put it here, the Summary/overview is a bit lacking as it is hard to find numbers for all to compare:

Performa 5200 vs PC/Amiga

Interesting. So what was a good CPU back then, let's start from the 6502 and end it at the Core 2 Duo timeframe, which was "Best in class" i.e. "the one that was least bad"?

I mean they all kind of did their purpose in their time. A 68040 is quite noticeably faster than a 68030 at the same MHz, nobody is disputing that, but I'm sure some kind of relative scale would be nice.

Of course, these CPU arguments have been hashed, hashed again, rehashed, gone back over again, repeated at least 6 times, for the better part of 40 years. But some things don't really matter all that much either: "The ALU isn't as efficient as ____ but realistically it didn't really change the overall progression either".


> Interesting. So what was a good CPU back then, let's start from the 6502 and end it at the Core 2 Duo timeframe, which was "Best in class" i.e. "the one that was least bad"?

It has actually taken quite a bit of research just to compare the 603 against CPUs from its own timeline: 1994-1995 (and possibly 1993-1996).

Starting from 6502 to Core 2 Duo would either not go very deep, or be quite an undertaking. Probably best to start another thread for some other "era" of processors. And also, this thread was originally for comparing the 5200 (which means not only the CPU, but the whole system), against the typical "competitors" at that time, e.g. 486 DX2/DX4 system and Pentium 75 (and similar Macs, like the 6100).

SPECint92/SPECfp92 give at least a somewhat fair evaluation of the CPU: since it was an important industry standard, you can expect all vendor-reported results to be somewhat "as good as it gets", whereas nbench results were reported with various compilers and flags and may reflect compiler settings more than the CPU.

But the spec92 is still very CPU focused, it won't tell you much how the system would behave in terms of graphics. For that I think Doom or Quake are good complements.

As for "what was a good CPU" for 1994-1996, I think if you only care about "raw power", it would have to be the 604 and Pentium (P54C). But the 603e at a higher clock rate was still good. The 603 should probably be seen as "won over 486 but lost against Pentium" (imho), while the 603e can win over the Pentium at a higher clock rate.

> I mean they all kind of did their purpose in their time. A 68040 is quite noticeably faster than a 68030 at the same MHz, nobody is disputing that, but I'm sure some kind of relative scale would be nice.

What would you propose the relative scale should be?

> What would you propose the relative scale should be

Some manner of... Processor Rating? The PowerPC 603e PR233. (joking)

> What would you propose the relative scale should be

SpecInt92 and SpecFP92 (as well as Spec89) were measured against the VAX 11/780, which was defined to have a performance of 1 MIPS (though in fact it was less than that). For those not familiar, the VAX 11/780 was an early 32-bit super-minicomputer developed at the end of the 1970s, around the same time as the Motorola 68000. Both of them are descendants of the PDP-11, except that the M68000 is more of a literal descendant, with similar kinds of 16-bit instruction formats and sets of 8 registers, whereas the VAX 11/780 is more like a mutant 32-bit x64 design based on a string of 8-bit opcodes.
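For anyone who wants to see how a VAX-relative score is put together, here is a minimal sketch. As far as I know, each SPEC92 benchmark produced a ratio of the VAX 11/780 reference time to the measured time, and the suite score was the geometric mean of those ratios. The timings below are invented purely for illustration and are not real SPEC results.

```python
import math


def spec_ratio(reference_seconds: float, measured_seconds: float) -> float:
    """How many times faster than the reference machine one benchmark ran."""
    return reference_seconds / measured_seconds


def geometric_mean(values: list[float]) -> float:
    """Geometric mean, computed via logs to avoid large products."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# Hypothetical (reference time, measured time) pairs in seconds.
timings = [(1000.0, 25.0), (2000.0, 40.0), (4000.0, 100.0)]

ratios = [spec_ratio(ref, measured) for ref, measured in timings]
score = geometric_mean(ratios)

print("per-benchmark ratios:", ratios)
print(f"suite score (geometric mean): {score:.1f}")
```

The geometric mean keeps one unusually fast benchmark from dominating the score, which is part of why these figures are a fairer CPU comparison than a single test.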

The lack of graphics benchmarks in SpecMark92 reflects the pre-workstation era so supplementing these kinds of tests with demanding graphics tests makes sense. We would find though that testing an entire system allows for far more variability. Consider systems that have a lower bus clock, but can support blitting and line drawing in hardware? Or even flat, Gouraud and Phong polygon filling? This describes early SGI computers; the Commodore Amiga (to some extent) and some PCs supported by an early GPU (though I forget the name of early GPUs that could do that).

That makes for some fun Top Trumps style comparisons!

And that can be fun.

However, if I was to propose a retro-scale and make it less, er, tribal, I would pick an ARMv2 at 8MHz as the baseline and an Archimedes as a system baseline. That's because almost certainly it counts as a "best-in-class" CPU, delivering far more performance per transistor or per watt than anything else I can think of from that period. And the associated chipset provided memory management, audio and video.

Early ARM CPUs (which were cacheless) had about 35K transistors, making them about 50% bigger than an 8086 or nearly half the size of a 68000. They were not universally comparable to the 8086 or the 68000 either, because they were extremely poor at handling 16-bit data and 16-bit aligned data (only 8-bit and 32-bit data was supported). If we like, we could skip ahead to ARMv4, which added halfword loads and stores.

Furthermore, the lineage of Macs converges on ARM with the appearance of Apple Silicon and ARM itself only became viable thanks to Apple. You can play with an emulator live here, it runs at the actual speed of an early ARM computer:

Archimedes Live! - Online Acorn Archimedes emulator

archi.medes.live


You can write assembly code directly in BBC BASIC (there's a 2-pass macro assembler built-in).

> <snip> I would pick an ARMv2 at 8MHz as the baseline and an Archimedes as a system baseline. <snip>

Hmm, but now I'm thinking it's quite hard to get standard bench tests onto it, so... maybe not!

> It had a dual bus. The CPU+L2 Cache + ROM was all on a 64-bit bus while RAM, Video and I/O were on the 32-bit bus.

The 603 has a 32-bit address bus and a selectable 32/64-bit data bus. The 603UM says that the mode must be selected at boot, so it's not dynamic: you get either a 32-bit or a 64-bit data bus. According to the Dev Note for the 5200, Capella resides on the 64-bit 603 bus along with the ROM and L2 cache. So you're probably correct there, though it's confusing: the respective Dev Notes for the 5200 and 5260 (basically the same machine, but with a faster CPU and optional L2 on the 5260) have different diagrams, with possibly incorrect labels on one or the other (or both). The 5260 diagram labels the line between the CPU and ROM/cache as "PC address bus (63-0)", where the address bus can only ever be 32 bits, and regardless there's no proper data bus path between them in the diagram (though I assume it's the line labeled "PC address bus (31-0)"). These Notes are about as accurate as the average Chilton manual is for cars, and these are narrow-scope first-party documents. I can understand that it's mostly just gee-whiz info for nerds here, since these systems were only ever produced by Apple and third-party hardware engineers really had no reason to get that far into the weeds on these (there was no 60x bus access via expansion slots, and Apple discouraged third-party use of the ROM/L2 cache slot), and it was transparent and therefore mostly irrelevant to software devs, but come on.

So you can understand the confusion, especially since the PowerBook 5300/Duo 2300/PowerBook 1400 do operate only in 32-bit bus mode. Why would Apple hobble their high-end portables but not their cheap (as in, "LC" was literally in the name of some of these models) offerings? It doesn't make a lot of sense, but then, this was mid-'90s Apple; there wasn't a lot of sense to be had.

Anyway, if it does reside on a 64-bit data bus, I assume Capella uses some sort of buffering to help bridge the two different bus widths (64-bit 603 to 32-bit 040). Or maybe it only sends the 603 data 32 bits at a time on the 64-bit bus (which is permissible according to spec). Hard to say; Apple never really publishes the inner workings of their ASICs.

> The 603 has a <snip: PPC603, 32-bit addr bus, 32 or 64-bit data bus, hardware selectable at boot. P5200:Capella divides 64-bit/32-bit busses. Apple Dev notes on P5200/5260 are confusing, doubly so as it's intended for 1st-tier Devs.> weeds on these

Part of the fun of this thread is finding the quirks in the 603 and P5200 specs. The 32-bit/64-bit hardware bus select is a good reminder though.

> (there was no 60x bus access <snip>

Good point, it was a 68030-style PDS.

> So you can understand the confusion, especially since the PowerBook 5300/Duo 2300/PowerBook 1400 do operate only in 32-bit bus mode. Why would Apple hobble <snip>

It's OK, I've misunderstood a few things here - at least once I've gone back & repeated a previous misunderstanding! Laptops are constrained by space and power, and a system-wide 32-bit bus would certainly save space and may also save power.

> <snip> I assume Capella uses some sort of buffering to help bridge the two different bus widths<snip>

Yep, it uses a bunch of 74LS543 (or 74HCT543) D-type latches.

> The 603 has a 32-bit address bus and a selectable 32/64-bit data bus. <snip> So you can understand the confusion <snip>

I've been confused a lot: it is hard to find primary sources, and even then they sometimes contradict each other. Also, when writing micro-benchmark code to test things, I've at times led myself to the wrong conclusion, simply because it is sometimes hard to pinpoint exactly why something behaves the way it does.

> Anyway, if it does reside on a 64-bit data bus, I assume Capella uses some sort of buffering to help bridge the two different bus widths (64-bit 603 to 32-bit 040). <snip>

Also, this TIL does say that the ROM and L2 are 64-bit. It is of course possible that they put the wrong info in all places, but that is perhaps the least likely explanation. My guess is that if one read and understood the 603 bus and the 68040 bus, one could probably make a pretty good guess at how Capella would translate 603 bus transfers to the 68040 bus. However, I can't say I've really looked at either. Maybe I should; perhaps there are some insights there that could help me make things run faster on the 603.

Attachments

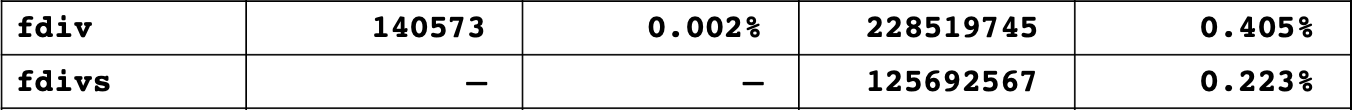

@Snial I realized this one actually has a table listing the distribution of instructions in the SPEC92 benchmarks! And for fdivs, not a single one is used in SPECint92, so the fact that fdivs stalls the integer unit for 18 cycles would never have shown up with their methodology.


> <snip> And for fdivs, not a single one is used for the specint92, so the fact that fdivs stalls the integer unit for 18 cycles was never something that would have showed up by their methodology.

I did manage to check it. My initial thought was that fdiv would only be used in SpecFP92. From page 192:

fdiv is used in SpecInt92 a bit, but as you say, fdivs isn't. Though both are used a decent amount in SpecFp. I guess your point is that Spec92 didn't test the interaction between integer operations and fdiv, so they assumed it would never happen?

> My guess is that if one read/understood the 603 bus, and the 68040 bus, then one could probably have a pretty good guess how Capella would transfer the 603 bus to the 68040

Probably, but not certain, because of the direct-store interface (which the 601 had a different name for).

The 60x bus is more complex than a 68k bus, but not by an unreasonable amount. The main differences are

(a) 64 bits (obvious!)

(b) separate bus controls for Address and Data busses.

(c) decent support for snooping

(d) parity on data bus

(z) the direct-store interface

(a) and (b) together mean a faster, more efficient bus. Address and data transfers can overlap to some extent, so the bus can be pipelined somewhat. It can even potentially be out-of-order in SMP systems with a suitable external controller, and SMP support is helped by the snooping (c). (d) is optional and helps with reliability (I don't think any PPC with a 60x bus ever used parity?).
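To illustrate why overlapping address and data phases helps, here is a toy model rather than real 60x bus timing. It assumes each transfer has a fixed-length address phase and data phase, and that with overlap the next address phase runs while the previous data phase is still in progress. The function names and all cycle counts are made up for illustration.

```python
def serial_bus_cycles(transfers: int, addr_cycles: int, data_cycles: int) -> int:
    """Each transfer finishes its data phase before the next address starts."""
    return transfers * (addr_cycles + data_cycles)


def overlapped_bus_cycles(transfers: int, addr_cycles: int, data_cycles: int) -> int:
    """Next address phase overlaps the previous data phase (one level deep)."""
    if transfers <= 0:
        return 0
    # The first transfer pays both phases in full; each later transfer only
    # adds the longer of the two phases, since the shorter one is hidden.
    return addr_cycles + data_cycles + (transfers - 1) * max(addr_cycles, data_cycles)


# Hypothetical timings: 2-cycle address phase, 4-cycle data phase, 4 transfers.
print("serial:    ", serial_bus_cycles(4, 2, 4), "cycles")
print("overlapped:", overlapped_bus_cycles(4, 2, 4), "cycles")
```

In this toy case the overlapped bus needs 18 cycles instead of 24. A bridge that ignores pipelining, as a simple single-CPU design might, would behave more like the serial case.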

For a single-CPU bus, it can be implemented in a not-too-complicated way by ignoring the snooping stuff and, optionally, the pipelining. It does use a lot more signals, but that's about it. There's some CPU-specific behavior as well, the most interesting of which is that the 603 (and 603e) can use their data bus as a 32-bit bus, which the 601 and 604 can't do.

And then there is (z), the direct-store interface (sigh). The good news is that it was dropped from later PowerPC variants and isn't available on e.g. the 603e. The bad news is that it could have been used on earlier systems; I don't know (I suspect it wasn't, and that it was there just to make IBM's life easier). Short version: the MMU can flag some addresses as belonging to a direct-store segment, and accesses (load/store) to those addresses use the direct-store interface, which redefines many of the control signals and the address signals and uses a different bus protocol.

> Not a helpful contribution but the 5200 was the worst computer and or Mac I have ever owned. What a piece of crap.

LOL, that is quite funny. I'd say the worst Mac I ever owned was a toss-up between my PB5300/100 and my Duo 230 (both black and white). Both had awful screens, but the D230 had a frustrating mini trackball that I'd always have to clean out after it got clogged up, and I only had a full-sized dock, which took up far too much space. If I'd had a minidock I would have kept it and my PM4400, probably until around 2002, when I'd have reached the point of needing Wi-Fi and Mac OS X.

However, the first PowerMac I ever used was a P5200 while I was house-sitting for a family, and for me it was a revelation compared with my LC II. I loved the swivel screen/main unit too. The P5200 gets a bad rap, but many people have found it to be less bad than its Road Apple designation suggests:

https://www.taylordesign.net/classic-macintosh/the-mythical-road-apple/

Anyway, this thread is about trying to exploit the 75MHz PPC603's relatively meagre performance vs Pentium 75 PCs, which, as it turns out, were better (he writes, trying not to vom).

> <snip> direct-store <snip> separate bus controls for Address and Data <snip> snooping <snip> overlap to some extent, so the bus can be pipelined <snip> out-of-order in SMP systems <snip> 603 (and 603e) can use their data bus as a 32-bits bus <snip>

Thanks for the info on the bus protocol. I don't think we've looked into those aspects much (except to consider critical word first on cache refills).

> direct-store <snip> it was there just to make IBM's life easier <snip> different bus protocol.

Also interesting. I knew IBM compromised with Moto on the PowerPC by giving it the M88K bus interface, but I guess they also snuck in some of their own features too.
