I was curious, so I checked, and the dnet statistics server is still up:

cgi.distributed.net/speed/

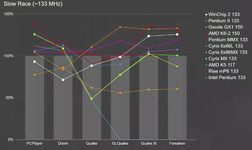

You can pull up graphs like this:

[Attached graph: throughput vs. clock speed]

The throughput is roughly linear in clock speed, and the slope of each line shows the per-clock efficiency of the chip. For this thread, here are some specific results I looked up for tuned assembly code:

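| Project | CPU | MHz | Rate |
|---|---|---|---|
| rc564 | PowerPC 603e | 100 | 310,190 |
| rc564 | PowerPC 603e (scaled to 75 MHz) | 75 | 232,000 |
| rc564 | Intel Pentium (P5) | 75 | 96,326 |
| rc564 | Intel 486dx4 | 75 | 71,124 |
| ogr | PowerPC 603e | 200 | 1,524,307 |
| ogr | PowerPC 603e (scaled to 75 MHz) | 75 | 571,000 |
| ogr | Intel Pentium (P5) | 75 | 277,653 |
| ogr | Intel 486dx4 | 100 | 252,230 |
| rc572 | PowerPC 603e | 240 | 793,275 |
| rc572 | PowerPC 603e (scaled to 75 MHz) | 75 | 248,000 |
| rc572 | Intel Pentium (P5) | 75 | 76,413 |
| rc572 | Intel 486dx4 | 100 | 65,739 |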
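The "scaled to 75 MHz" rows assume throughput is linear in clock speed, as the graph suggests. A minimal sketch of that normalization using the 603e numbers from the table above (the function name `scale_to_mhz` is just for illustration):

```python
def scale_to_mhz(rate: float, measured_mhz: float, target_mhz: float) -> float:
    """Scale a benchmark rate to another clock, assuming rate is linear in MHz."""
    return rate * target_mhz / measured_mhz


# (project, measured MHz, rate) for the PowerPC 603e rows
PPC603E = [("rc564", 100, 310_190), ("ogr", 200, 1_524_307), ("rc572", 240, 793_275)]

for project, mhz, rate in PPC603E:
    scaled = scale_to_mhz(rate, mhz, 75)
    print(f"{project}: {rate:,} at {mhz} MHz -> {scaled:,.0f} at 75 MHz")
```

This gives roughly 232,600, 571,600 and 247,900, in line with the rounded figures in the table. The linear assumption is the weak point: memory and bus speed do not scale with the core clock, although these tight loops barely touch memory.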

This is an interesting source. Thanks!

The 603e should not be confused with the 603. While they are very similar, they are different enough that they should be treated separately:

- 603e: stores are faster (2x throughput)

- 603e: the SRU (system register unit) can also execute some integer instructions, so it effectively has "~2 ALUs" (like the 68060 and the Pentium, it can dual-issue and dual-complete two integer instructions, subject to pairing restrictions).

- The L1 caches are twice as big on the 603e (16 KB + 16 KB instead of 8 KB + 8 KB) and 4-way instead of 2-way set-associative, which is unlikely to matter for this test, though.

The 603 has entries for rc564 and ogr (ogr has only one datapoint, but all the other entries have a low standard deviation, so that seems OK).

As a benchmark, this workload measures only the integer ALU (no FPU work at all). It also won't exercise the caches and LSU much, since I presume most accesses are linear and happen in one tight inner loop that mostly does bit-shifting work (for which the PowerPC rlwnm and rlwimi instructions are a great fit).
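To make the "tight inner loop" point concrete, here is a minimal Python sketch of RC5-32/12, the cipher behind rc564 and rc572 (64- and 72-bit keys). It is a plain reference-style implementation, not distributed.net's tuned core, and `key_matches` is a hypothetical helper showing one step of a brute-force search. The whole working set is the 26-word expanded key table plus two or three key words, and almost every operation is an add, XOR or rotate; the fixed rotate by 3 maps onto rlwinm and the data-dependent rotates onto rlwnm.

```python
MASK32 = 0xFFFFFFFF
P32, Q32 = 0xB7E15163, 0x9E3779B9  # RC5 magic constants for 32-bit words
ROUNDS = 12                        # RC5-32/12


def rotl32(x: int, n: int) -> int:
    """Rotate a 32-bit word left (a single rlwinm/rlwnm on PowerPC)."""
    n &= 31
    return ((x << n) | (x >> (32 - n))) & MASK32


def rotr32(x: int, n: int) -> int:
    """Rotate a 32-bit word right."""
    n &= 31
    return ((x >> n) | (x << (32 - n))) & MASK32


def expand_key(key: bytes, rounds: int = ROUNDS) -> list:
    """RC5 key schedule: mix the key bytes into 2*(rounds+1) words."""
    c = max(1, (len(key) + 3) // 4)
    L = [0] * c
    for i in range(len(key) - 1, -1, -1):  # pack key bytes little-endian
        L[i // 4] = ((L[i // 4] << 8) + key[i]) & MASK32
    t = 2 * (rounds + 1)                   # 26 words for 12 rounds
    S = [P32]
    for _ in range(1, t):
        S.append((S[-1] + Q32) & MASK32)
    A = B = i = j = 0
    for _ in range(3 * max(t, c)):
        A = S[i] = rotl32((S[i] + A + B) & MASK32, 3)      # fixed rotate
        B = L[j] = rotl32((L[j] + A + B) & MASK32, A + B)  # data-dependent rotate
        i, j = (i + 1) % t, (j + 1) % c
    return S


def encrypt_block(S: list, a: int, b: int, rounds: int = ROUNDS) -> tuple:
    a = (a + S[0]) & MASK32
    b = (b + S[1]) & MASK32
    for r in range(1, rounds + 1):
        a = (rotl32(a ^ b, b) + S[2 * r]) & MASK32
        b = (rotl32(b ^ a, a) + S[2 * r + 1]) & MASK32
    return a, b


def decrypt_block(S: list, a: int, b: int, rounds: int = ROUNDS) -> tuple:
    for r in range(rounds, 0, -1):
        b = rotr32((b - S[2 * r + 1]) & MASK32, a) ^ a
        a = rotr32((a - S[2 * r]) & MASK32, b) ^ b
    return (a - S[0]) & MASK32, (b - S[1]) & MASK32


def key_matches(key: bytes, plaintext: tuple, ciphertext: tuple) -> bool:
    """One iteration of a key search: expand a candidate key, encrypt, compare."""
    return encrypt_block(expand_key(key), *plaintext) == ciphertext
```

A search client repeats `key_matches` for each candidate key, so the key schedule (78 mixing steps) dominates; nothing in it leaves the register file for long, which is why caches and the LSU barely matter here.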

I wouldn't expect these results to be representative of daily or typical usage. They are representative of "how well can this CPU do on this exact problem", but since the problem is essentially one inner loop, it does not exercise the system very broadly.
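The ogr project is similarly narrow. Its core question is whether a set of marks forms a Golomb ruler (all pairwise distances distinct), and that check can be done almost entirely with shifts, ANDs and ORs on distance bitmaps. A simplified Python sketch, assuming the marks are given in increasing order; the names `near` and `dists` are hypothetical, and the real OGR cores run an incremental search with pruning on fixed-width machine words rather than checking whole rulers like this:

```python
def is_golomb(marks) -> bool:
    """Return True if all pairwise distances between the marks are distinct.

    Bit d of `dists` records that distance d has already been measured.
    Bit d of `near` records a mark sitting d units left of the newest mark.
    """
    near, dists = 1, 0
    for prev, cur in zip(marks, marks[1:]):
        step = cur - prev
        if step <= 0:
            raise ValueError("marks must be strictly increasing")
        new = near << step   # distances from the new mark to every earlier mark
        if new & dists:      # any overlap means a distance repeats
            return False
        dists |= new
        near = new | 1       # re-anchor: the new mark is now at distance 0
    return True
```

For example, `is_golomb([0, 1, 4, 6])` is true (distances 1 through 6 each appear once), while `is_golomb([0, 1, 2])` is false because distance 1 appears twice.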

| CPU | MHz | Project | Mean rate | Std dev | Std dev (%) | Samples |
|---|---|---|---|---|---|---|
| PowerPC 603e | 100 | rc564 | 310,190.14 | 13,672.54 | 4.41 | 7 |
| PowerPC 603e (scaled to 75 MHz) | 75 | rc564 | 232,642.61 | | | |
| Intel Pentium (P5) | 133 | rc564 | 181,776.30 | 12,626.97 | 6.95 | 71 |
| Motorola 68060 | 50 | rc564 | 140,113.75 | 15,970.69 | 11.40 | 12 |
| PowerPC 603 | 75 | rc564 | 135,136.50 | 3,250.26 | 2.41 | 4 |
| PowerPC 601 | 60 | rc564 | 105,464.20 | 1,443.11 | 1.37 | 5 |
| Intel Pentium (P5) | 75 | rc564 | 96,326.73 | 6,379.38 | 6.62 | 15 |
| Intel 486dx2 | 66 | rc564 | 63,815.65 | 4,806.73 | 7.53 | 26 |

I would gladly have swapped my 75 MHz 603 for a P133 back in the day.
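Ranking the rc564 rows by raw rate and by rate per MHz gives different orders, which is the trade-off behind that wish: per clock the 603 beats the P133, but the P133's clock lead more than makes up for it. A small sketch over the mean rates from the table (the short CPU labels are my own):

```python
# (CPU, MHz, mean rc564 rate) from the table above
RC564 = [
    ("PowerPC 603e", 100, 310_190.14),
    ("Pentium 133", 133, 181_776.30),
    ("Motorola 68060", 50, 140_113.75),
    ("PowerPC 603", 75, 135_136.50),
    ("PowerPC 601", 60, 105_464.20),
    ("Pentium 75", 75, 96_326.73),
    ("486dx2", 66, 63_815.65),
]

by_rate = sorted(RC564, key=lambda row: row[2], reverse=True)
by_clock = sorted(RC564, key=lambda row: row[2] / row[1], reverse=True)

for name, mhz, rate in by_clock:
    print(f"{name:<15} {rate:>11,.0f} total {rate / mhz:>8.1f} per MHz")
```

Per MHz the order is 603e, 68060, 603, 601, then the Pentiums and the 486, while by raw rate the P133 sits second, ahead of the 68060 and the 603.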

| CPU | MHz | Project | Mean rate | Std dev | Std dev (%) | Samples |
|---|---|---|---|---|---|---|
| PowerPC 603e | 100 | ogr | 738,631 | 0.00 | 0.00 | 1 |
| PowerPC 603e (scaled to 75 MHz) | 75 | ogr | 553,973 | | | |
| Intel Pentium (P5) | 133 | ogr | 535,293 | 24,375.21 | 4.55 | 21 |
| PowerPC 603 | 75 | ogr | 341,123 | 0.00 | 0.00 | 1 |
| Motorola 68060 | 50 | ogr | 315,917 | 27,017.24 | 8.55 | 12 |
| PowerPC 601 | 66 | ogr | 314,784 | 0.00 | 0.00 | 1 |
| PowerPC 601 (scaled to 60 MHz) | 60 | ogr | 286,167 | | | |
| Intel Pentium (P5) | 75 | ogr | 277,653 | 23,432.00 | 8.44 | 2 |
| Intel 486dx2 | 50 | ogr | 142,276 | 0.00 | 0.00 | 1 |

Although I'm not fully convinced, I appreciate the additional data point. It does show that the PowerPC, and on some problems even the 603, can beat Intel.

I think @Snial mentioned earlier in the thread (I hope I'm recalling correctly) that he suspected the PowerPC was designed around a small set of example problems. I think that is why it does relatively well on SPECint92 but has a lot of trouble in real-life use.

I've done some microbenchmarking of the 603 as well, and found things like this: it stalls the LSU completely on a cache miss, which easily stalls the ALU too, and if you're dealing with I/O or VRAM, those misses take a long time to resolve. In contrast, both the 68060 and Intel (as far as I know) can carry on with loads that hit the cache even while a store is waiting on a miss. Cache misses like these happen all the time in daily usage, and they are completely invisible to these very isolated "one inner loop" problems.

I know nothing about chip design, but I imagine that if they had designed against larger, more complex programs, they might have let loads that hit proceed past a pending store miss, and also not let an FPU divide stall the whole CPU. Those two fixes (I have no idea how many transistors they would have cost) would have made the 603 a much stronger CPU, and would also have let FPU/ALU work continue while a store cache miss is pending.
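A toy model of the difference described above: each memory op takes one cycle, a miss costs a fixed extra latency, and the only question is whether later cache hits may proceed while a store miss is outstanding. This is a hypothetical illustration, not a cycle-accurate model of the 603, 68060 or Pentium; the function names, the single-outstanding-miss rule and the 20-cycle latency are all made up.

```python
def cycles_blocking(ops, miss_latency: int) -> int:
    """Every miss stalls everything until it resolves (603-style, as described)."""
    return sum(1 + (0 if hit else miss_latency) for _kind, hit in ops)


def cycles_hit_under_miss(ops, miss_latency: int) -> int:
    """A store miss drains in the background while cache hits keep going.

    Only one miss may be outstanding: any further miss first waits for the
    pending store miss, and a load miss then pays its own full latency.
    """
    t = 0
    pending_until = 0  # cycle at which the outstanding store miss resolves
    for kind, hit in ops:
        if hit:
            t += 1
            continue
        t = max(t, pending_until)
        if kind == "store":
            t += 1
            pending_until = t + miss_latency
        else:
            t += 1 + miss_latency
    return max(t, pending_until)


# One slow store (say, to VRAM) followed by loop work that hits the cache.
ops = [("store", False)] + [("load", True)] * 10
print(cycles_blocking(ops, 20), cycles_hit_under_miss(ops, 20))  # 31 21
```

With no misses, or with only load misses, both models give the same count. The gap only appears when stores miss while other work could continue, which is exactly the situation a benchmark like rc564 or ogr never creates.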