David Cook

Read this story to discover lies, bugs, and 68K variations.

I ran across an interesting note in Inside Macintosh Memory about the performance of NewPtrClear. It is unusual that documentation would use the word "Currently" instead of "As of System 7.1". Additionally, it is unusual that it says "large block" instead of "blocks larger than 64 bytes" or something specific.

This is a great opportunity to patch the OS to boost the speed for all programs! Or is it?

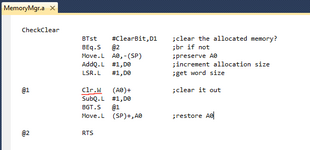

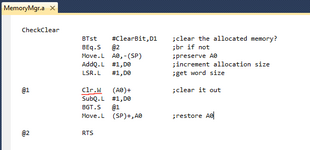

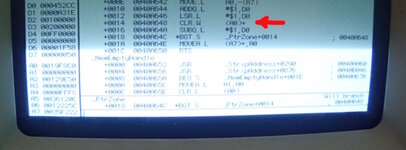

First, let's see what the ROM source code looks like. Wait a minute. It is clearing two bytes (Clr.W) at a time. Inside Macintosh claims "one byte" (Clr.B) at a time.

Note: Apple's code relies on the fact that handles and pointers are always allocated by the OS on even address boundaries and with an even number of bytes. The OS always returns a pointer on an even address, and if you ask for 17 bytes, you'll receive a 17-byte pointer but the 18th byte will remain unused. The even address matters because the 68000 CPU will crash if you attempt to write a word to an odd address. The even size matters because Apple's code writes only words (not bytes), so it always clears an even number of bytes. So, Apple's code is not safe to use for clearing arbitrary addresses and sizes -- it is only safe for this specific purpose.
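To make the note above concrete, here is a minimal C sketch of a word-at-a-time clear. It is not Apple's ROM code (that is 68K assembly); the name ClearWords, the signature, and the round-up-to-even step are my assumptions for illustration, and it uses modern <stdint.h> types rather than anything from a period compiler.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical C rendering of a word-at-a-time clear.
   Assumes `block` sits on an even address and that the allocation
   really holds an even number of bytes, as described in the note.
   It is NOT safe for arbitrary addresses or sizes. */
static void ClearWords(void *block, size_t byteCount)
{
    uint16_t *p = (uint16_t *)block;
    size_t words = (byteCount + 1) / 2;   /* 17 bytes -> 9 words = 18 bytes */

    while (words--)
        *p++ = 0;                         /* one 16-bit write per pass */
}
```

Asking this routine to clear 17 bytes writes 18, which is only harmless because the allocator already padded the block to an even size.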

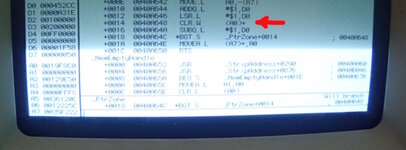

Let's allocate a large pointer on a Macintosh SE to give us time to manually interrupt into MacsBug to check if this is the actual shipping implementation. Yup. As of System 7.0.1 and the SE SuperDrive ROM, the code definitely clears a word at a time and the ROM code is being used (not an improved System routine).

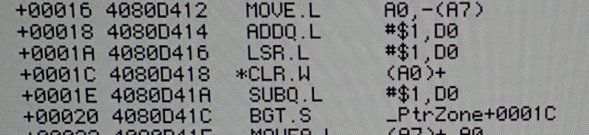

Let's check that on a IIsi with System 7.6.1. Yup. Same code. So, as early as 1989 (SE FDHD ROM) and as late as 1997 (7.6.1) Apple was clearing a word (16 bits) at a time on 68K Macs.

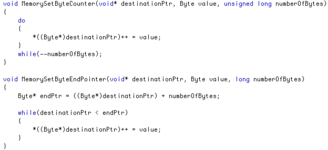

I searched online for better clearing routines in C. They follow this pattern:

1. Align the pointer to an even 4-byte address, clearing initial bytes as necessary. Aligning the address prevents crashes on some CPUs, but more importantly also writes across the entire 32-bit bus at once to all parallel memory chips. Addresses not on 4 byte boundaries split 32-bit writes into 24/8, 16/16, or 8/24. We want 32/0.

2. As much as possible, write a bunch of longs in a row (like 8) before looping to decrease the loop cost. For example, 8x4bytes=32 bytes per loop. This is called "loop unrolling".

3. As much as possible, write a single long (4 bytes) per loop with whatever remains.

4. Write a single byte per loop for the remainder.

Metrowerks code will be presented later to demonstrate this algorithm.

FYI. Most clearing code is actually based on the memset() standard C library code, where we set the value to zero.
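The four steps above can be sketched in C roughly as follows. This is my own illustrative version, not the Metrowerks source and not the routine I benchmarked; the name ClearAligned and the choice of 8 longs per unrolled pass are assumptions taken from the example in step 2.

```c
#include <stddef.h>
#include <stdint.h>

/* Illustrative four-step clear: align, unrolled longs, single longs, bytes. */
static void ClearAligned(void *dest, size_t count)
{
    unsigned char *p = (unsigned char *)dest;
    uint32_t *lp;

    /* Step 1: write single bytes until the address is 4-byte aligned. */
    while (count > 0 && ((uintptr_t)p & 3) != 0) {
        *p++ = 0;
        count--;
    }

    /* Carry the aligned byte pointer over to the long pointer. */
    lp = (uint32_t *)p;

    /* Step 2: unrolled loop, 8 longs (32 bytes) per pass. */
    while (count >= 32) {
        lp[0] = 0; lp[1] = 0; lp[2] = 0; lp[3] = 0;
        lp[4] = 0; lp[5] = 0; lp[6] = 0; lp[7] = 0;
        lp += 8;
        count -= 32;
    }

    /* Step 3: one long (4 bytes) per pass with whatever remains. */
    while (count >= 4) {
        *lp++ = 0;
        count -= 4;
    }

    /* Step 4: finish the last 0 to 3 bytes one at a time. */
    p = (unsigned char *)lp;
    while (count > 0) {
        *p++ = 0;
        count--;
    }
}
```

Note that both pointer hand-offs (byte pointer to long pointer after step 1, and back again before step 4) are easy to forget, and skipping either one defeats the alignment work or leaves tail bytes uncleared.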

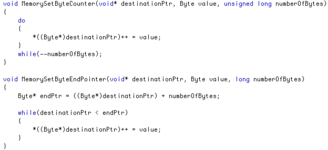

For fun, let's compare the performance of writing a single byte at a time (decrementing byte count), a single byte at a time (looking for the final pointer address), Apple's code, Metrowerks standard library code (decrementing byte count), and my own code (looking for the final pointer address).

For the sake of code speed, we are going to assume no nil pointers and no numberOfBytes<=0. Normally we would check more carefully at the start of the routine. The major difference between the two methods below is that one decrements the number of bytes remaining and the other compares two addresses. I was raised on address comparisons.

Note: Yes, the second routine could be slightly faster with a do-while. But I just saw that and I'm not going to rerun all my tests.
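For readers who cannot see the screenshot, here is a rough C sketch of the two byte-at-a-time approaches. MemorySetByteCounter is the name used in the graphs; MemorySetByteEndPtr, the parameter lists, and the exact loop shapes are my guesses for illustration, so treat this as a reconstruction rather than the tested code. Like the originals, both assume a non-nil pointer and numberOfBytes > 0.

```c
#include <stddef.h>

/* "Byte": write a byte, then decrement the bytes-remaining counter. */
static void MemorySetByteCounter(unsigned char *dest, unsigned char value,
                                 long numberOfBytes)
{
    do {
        *dest++ = value;
    } while (--numberOfBytes > 0);
}

/* "ByteEndPtr": compute the end address once, then compare addresses. */
static void MemorySetByteEndPtr(unsigned char *dest, unsigned char value,
                                long numberOfBytes)
{
    unsigned char *end = dest + numberOfBytes;

    while (dest < end)      /* a do-while would skip the first comparison */
        *dest++ = value;
}
```

The counter version touches two values per pass (the pointer and the count), while the end-pointer version only advances the pointer and compares it against a fixed address.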

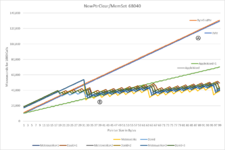

Graphs

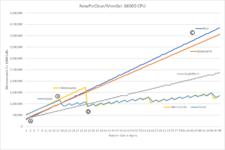

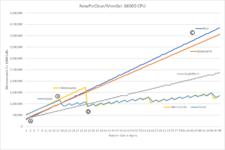

For all graphs presented, smaller values are better as the Y axis is time taken.

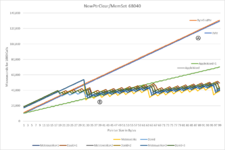

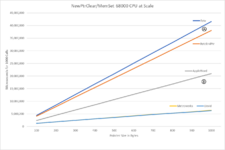

Here are the results for a 68000 SE SuperDrive with System 7.0.1. A single buffer is allocated, and then each routine is called 1000 times for a given length, the time is recorded, and the length is increased. The upper far right of this graph says that the MemorySetByteCounter algorithm ("Byte") took almost 4.5 seconds (4.5 million microseconds) to clear a 99 byte long buffer 1000 times in a row. Or, about 4.5 milliseconds per call.
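The measurement loop described above looks roughly like this in portable C. It is a sketch only: my actual test used Mac OS timing, whereas this version substitutes the standard clock() call, and the names ClearProc, ClearWithMemset, TimeClears, and MicrosPerCall are hypothetical.

```c
#include <stddef.h>
#include <string.h>
#include <time.h>

/* Any clearing routine under test, wrapped to a common signature. */
typedef void (*ClearProc)(void *dest, size_t count);

/* Stand-in routine so the harness has something to time. */
static void ClearWithMemset(void *dest, size_t count)
{
    memset(dest, 0, count);
}

/* Call `proc` on the same buffer `calls` times for one length and
   return the total elapsed time in microseconds. */
static double TimeClears(ClearProc proc, void *buffer, size_t length, int calls)
{
    clock_t start = clock();
    for (int i = 0; i < calls; i++)
        proc(buffer, length);
    clock_t stop = clock();

    return (double)(stop - start) * 1000000.0 / CLOCKS_PER_SEC;
}

/* Convert a total for many calls into a per-call figure. */
static double MicrosPerCall(double totalMicros, int calls)
{
    return totalMicros / calls;
}
```

With the figures from the graph, MicrosPerCall(4500000.0, 1000) gives 4500 microseconds, which is the 4.5 milliseconds per call quoted above. On a modern optimizing compiler the repeated clears may be partly optimized away, so this harness is only meaningful with optimization kept in check.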

A) For very small pointer lengths, the simple byte and word routines are much faster than the fancy Metrowerks and David routines. Less setup overhead per call.

B) David's routine switches to long writes at 19 bytes. Metrowerks waits until 32 bytes.

C) Writing a byte and decrementing a counter ("Byte") is slightly slower than writing a byte and doing an address comparison ("ByteEndPtr"). My teachers would be proud.

D) Apple's word clearing algorithm is definitely an improvement over a single byte. It is faster than the fancy algorithms until about 32 bytes.

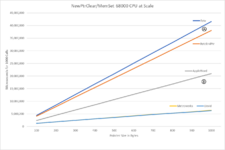

Let's try longer pointer lengths.

A) The distance between the "Byte" and "ByteEndPtr" algorithms is widening. That means we are definitely saving cycles in the loop itself, rather than in the setup/overhead portions of the call, which would stay constant as pointer size changed.

B) The fancy algorithms are almost three times faster than Apple's NewPtrClear ("AppleWord") and just as much again faster than byte based algorithms.

I cannot test misaligned memory clearing performance on the 68000, because Apple's algorithm crashes. Spoiler alert: so does Metrowerks!

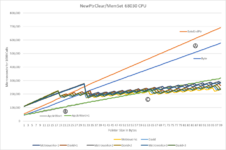

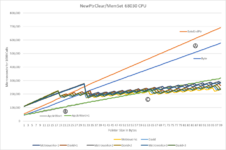

But, we can test aligned and misaligned memory on a 68030. In this case, a 68030 IIsi with System 7.6.1.

A) What??? My end-pointer check ("ByteEndPtr") is significantly slower than a bytes-remaining check ("Byte") on a 68030. This is the opposite of the 68000. My teachers would be confused.

B) Aligned and misaligned word writes ("AppleWord", "AppleWord+1") are basically identical on a 68030. No performance hit. That's unexpected.

C) Word writing algorithms ("AppleWord", "AppleWord+1") are relatively faster on a 68030. That is, the algorithm is now faster than the fancy version up to around 64 bytes, as opposed to 32 bytes on the 68000.

So, if you performance-tuned assembly language code for a 68000, you were frustrated when the 68030 came out.

Let's try longer pointers.

A) Hmmmm. Metrowerks' memset performance on misaligned memory ("Metrowerks+") is slower than aligned ("Metrowerks"). But, their code aligns memory at the start. How could it not be the same performance?

Look at my comments ("DAC") above. Although the Metrowerks code aligns memory at the start, it is missing a line for 68K processors to copy that aligned pointer over. As such, when working with odd memory addresses on a 68K CPU, the Metrowerks memset() routine (and other code based on it) does the following:

1. Crashes on a 68000

2. Doesn't clear the end byte(s), which can result in a crash or bad behavior on all 68K CPUs due to lack of initialized structures.

3. Is up to 50% slower on a 68020/68030.

This bug exists from at least Gold 11 up to Pro 5. I did not check later versions.

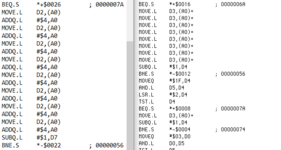

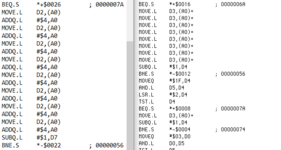

What about earlier versions of CodeWarrior? Source code does not appear to be included in Gold 7, but the disassembly is significantly different. I didn't step through the code, because the first thing I noticed is that it is slowed by poor compiler optimization. The left side (Gold 7) shows two instructions (MOVE.L D2,(A0) and ADDQ.L #$4,A0) for every single instruction (MOVE.L D3,(A0)+) on the right side (Gold 11). The ADDQ.L consumes 8 extra cycles for each clear. Blech.

It seems 68K performance and bugs were not a focus of Metrowerks as early as 1995. And a nasty 68K bug was introduced as early as 1996 and never(?) fixed.

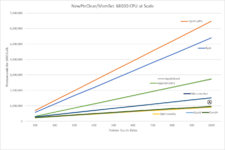

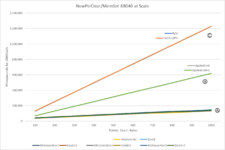

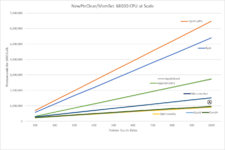

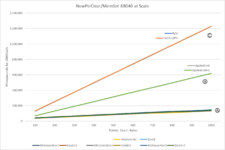

Enough of that. Let's move on to clearing performance on a 68040. Specifically, a Quadra 650 with System 7.6.1.

A) The two methods of checking for the end of a byte loop now perform the same on a 68040 (apart from variable initialization overhead).

B) Apple's word clearing routine is now somewhere in the middle of relative performance, breaking even with the fancy algorithms around 40 bytes, rather than 32 or 64.

For bigger pointers?

A) Address memory alignment no longer matters. Something about the 68040 allows 32-bit writes on uneven addresses to not slow down the processor. Cool.

B) The fancy algorithm has improved to 4.4x speed over the Apple word algorithm at lengths of 1000 bytes.

C) The fancy algorithm is 10x faster than writing a byte at a time at lengths of 1000 bytes.

Conclusions

1. Contrary to Inside Macintosh, the Apple NewPtrClear algorithm writes two bytes at a time. It is the most efficient or competitive algorithm on all 68K CPU variants until around 64 bytes. It turns out that most NewPtrClear calls are erasing small structs (instead of initializing inner variables one at a time), so a patch would not likely improve many programs.

2. If your code needs to routinely clear blocks larger than 100 bytes, then writing 32 bits at a time is definitely worthwhile.

3. The 68K CPUs went through substantial performance changes in instructions and memory access, such that code optimizations for one could hurt others. The 68040 seems to have made everything performant. Therefore, except under very specialized circumstances, you should write stable maintainable C code rather than risking defects with overly optimized algorithms.

4. Except for the earliest releases, do not trust Metrowerks support for 68K. Double check their code. Other than a handful of LC/Performa models, Apple had discontinued all 68K computers by 1996 (CodeWarrior 8) and PowerPC models were in their second generation. Metrowerks was looking toward the future rather than testing for backwards compatibility.

- David

I ran across an interesting note in Inside Macintosh Memory about the performance of NewPtrClear. It is unusual that documentation would use the word "Currently" instead of "As of System 7.1". Additionally, it is unusual that is says "large block" instead of "blocks larger than 64 bytes" or something specific.

This is a great opportunity to patch the OS to boost the speed for all programs! Or is it?

First, let's see what the ROM source code looks like. What a minute. It is clearing two bytes (Clr.W) at a time. Inside Macintosh claims "one byte" (Clr.B) at a time.

Note: Apple's code relies on the fact that handles and pointers are always allocated by the OS on even address boundaries and with an even number of bytes. The OS always returns a pointer on an even address, and if you ask for 17 bytes, you'll a receive a 17 byte pointer but the 18th byte will remain unused. This is because the 68000 CPU will crash if you attempt to write a word to an odd address. And, in Apple's code, writing only words (not bytes) means an even number of bytes will always be cleared. So, Apple's code is not safe to use for clearing arbitrary addresses and sizes -- it is only safe for this specific purpose.

Let's allocate a large pointer on a Macintosh SE to give us time to manually interrupt into MacsBug to check if this is the actual shipping implementation. Yup. As of System 7.0.1 and the SE SuperDrive ROM, the code definitely clears a word at a time and the ROM code is being used (not an improved System routine).

Let's check that on a IIsi with System 7.6.1. Yup. Same code. So, as early as 1989 (SE FDHD ROM) and as late as 1997 (7.6.1) Apple was clearing a word (16 bits) at a time on 68K Macs.

I searched online for better clearing routines in C. They follow this pattern:

1. Align the pointer to an even 4-byte address, clearing initial bytes as necessary. Aligning the address prevents crashes on some CPUs, but more importantly also writes across the entire 32-bit bus at once to all parallel memory chips. Addresses not on 4 byte boundaries split 32-bit writes into 24/8, 16/16, or 8/24. We want 32/0.

2. As much as possible, write a bunch of longs in a row (like 8) before looping to decrease the loop cost. For example, 8x4bytes=32 bytes per loop. This is called "loop unrolling".

3. As much as possible, write a single long (4 bytes) per loop with whatever remains.

4. Write a single byte per loop for the remainder.

Metrowerks code will be presented later to demonstrate this algorithm.

FYI. Most clearing code is actually based on the memset() standard C library code, where we set the value to zero.

For fun, let's compare the performance of writing a single byte at a time (decrementing byte count), a single byte at a time (looking for the final pointer address), Apple's code, Metrowerks standard library code (decrementing byte count), and my own code (looking for the final pointer address).

For code speed sake, we are going to assume no nil pointers or numberOfBytes<=0. Normally we would check more carefully at the start of the routine. The major difference between the two methods below is that one decrements the number of bytes remaining and the other compares two addresses. I was raised on address comparisons.

Note: Yes, the second routine could be slightly faster with a do-while. But I just saw that and I'm not going to rerun all my tests.

Graphs

For all graphs presented, smaller values are better as the Y axis is time taken.

Here are the results for a 68000 SE SuperDrive with System 7.0.1. A single buffer is allocated, and then each routine is called 1000 times for a given length, the time is recorded, and the length is increased. The upper far right of this graph says that the MemorySetByteCounter algorithm ("Byte") took almost 4.5 seconds (4.5 million microseconds) to clear a 99 byte long buffer 1000 times in a row. Or, about 4.5 milliseconds per call.

A) For very small pointer lengths, the simple byte and word routines are much faster than the fancy Metrowerks and David routines. Less setup overhead per call.

B) David's routine switches to long writes at 19 bytes. Metrowerks waits until 32 bytes.

C) Writing a byte and decrementing a counter ("Byte") is slightly slower than writing a byte and doing an address comparison ("ByteEndPtr"). My teachers would be proud.

D) Apple's word clearing algorithm is definitely an improvement over a single byte. It is faster than the fancy algorithms until about 32 bytes.

Let's try longer pointer lengths.

A) The distance between the "Byte" and "ByteEndPtr" algorithms is widening. That means the we are definitely saving cycles in the loop, rather than in setup/overhead portions of the call that would stay static as pointer size changed.

B) The fancy algorithms are almost three times faster than Apple's NewPtrClear ("AppleWord") and just as much again faster than byte based algorithms.

I cannot test misaligned memory clearing performance on the 68000, because Apple's algorithm crashes. Spoiler alert: so does Metrowerks!

But, we can test aligned and misaligned memory on a 68030. In this case, a 68030 IIsi with System 7.6.1.

A) What??? My end-pointer check ("ByteEndPtr") is significantly slower than a bytes-remaining check ("Byte") on a 68030. This is the opposite of the 68000. My teachers would be confused.

B) Aligned and misaligned word writes ("AppleWord", "AppleWord+1") are basically identical on a 68030. No performance hit. That's unexpected.

C) Word writing algorithms ("AppleWord", "AppleWord+1") are relatively faster on a 68030. That is, the algorithm is now faster than the fancy version up to around 64 bytes, as opposed to 32 bytes on the 68000.

So, if you performance tuned assembly language code for a 68000, you were frustrated when the 68030 came out.

Let's try longer pointers.

A) Hmmmm. Metrowerks' memset performance on misaligned memory ("Metrowerks+") is slower than aligned ("Metrowerks"). But, their code aligns memory at the start. How could it not be the same performance?

Look at my comments ("DAC") above. Although the Metrowerks code aligns memory at the start, it is missing a line for 68K processor to copy that pointer over. As such, the Metrowerks memset() routine (and other code based on it) when working with odd memory addresses on a 68K CPU does the following:

1. Crashes on a 68000

2. Doesn't clear the end byte(s), which can result in a crash or bad behavior on all 68K CPUs due to lack of initialized structures.

3. Is up to 50% slower on a 68020/68030.

This bug exists from at least Gold 11 up to Pro 5. I did not check later versions.

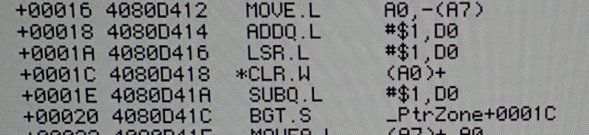

What about earlier versions of CodeWarrior? Source code does not appear to be included in Gold 7, but the disassembly is significantly different. I didn't step through the code, because the first thing I noticed is it is slowed due to poor compiler optimization. The left side (Gold 7) shows two instructions (MOVE.L D2,(A0), ADDQ.L #$4,AO) for every single instruction (MOVE.L D3,(A0)+) on the right side (Gold 11). The ADDQ.L consumes 8 extra cycles for each clear. Blech.

It seems 68K performance and bugs was not a focus of Metrowerks as early as 1995. And, a nasty 68K bug was introduced as early as 1996 and never(?) fixed.

Enough of that. Let's move on to clearing performance on a 68040. Specifically, a Quadra 650 with System 7.6.1.

A) The two methods of checking for the end of a byte loop are now the same performance (less variable initialization overhead) on a 68040.

B) Apple's word clearing routine is now somewhere in the middle of relative performance, breaking even with the fancy algorithms around 40 bytes, rather than 32 or 64.

For bigger pointers?

A) Address memory alignment no longer matters. Something about the 68040 allows 32-bit writes on uneven addresses to not slow down the processor. Cool.

B) The fancy algorithm has improved to 4.4x speed over the Apple word algorithm at lengths of 1000 bytes.

C) The fancy algorithm is 10x faster than writing a byte at a time at lengths of 1000 bytes.

Conclusions

1. Contrary to Inside Macintosh, the Apple NewPtrClear algorithm writes two-bytes at a time. It is the most efficient or competitive algorithm on all 68K CPU variants until around 64 bytes. It turns out that most NewPtrClear calls are erasing small structs (instead of initializing inner variables one at a time), so a patch would not likely improve many programs.

2. If your code needs to routinely clear blocks larger than 100 bytes, then writing 32-bits at a time is definitely worthwhile.

3. The 68K CPUs went through substantial performance changes in instructions and memory access, such that code optimizations for one could hurt others. The 68040 seems to have made everything performant. Therefore, except under very specialized circumstances, you should write stable maintainable C code rather than risking defects with overly optimized algorithms.

4. Except for the earliest releases, do not trust Metrowerks support for 68K. Double check their code. Other than a handful of LC/Performa models, Apple had discontinued all 68K computers by 1996 (CodeWarrior 8) and PowerPC models were in their second generation. Metrowerks was looking toward the future rather than testing for backwards compatibility.

- David