Hello Mac community,

Since I got my first actual Macintosh (an LC475) last month, I must admit that the "Mac bug" has bitten me, which is why I pulled the trigger on a Macintosh SE/30 yesterday.

To have some fun with it, I decided to port one of my Amiga projects to the SE/30. So today I would like to announce that I have started developing a new graphics card for the SE/30, called:

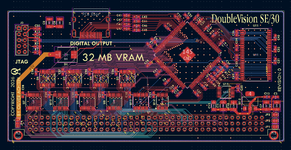

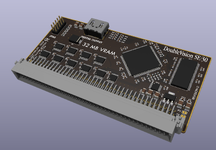

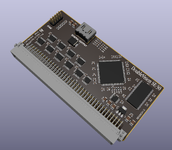

DoubleVision SE/30

Technically, it is very much a Macintosh port of my Amiga graphics card, the P-Vision, but with some SE/30-specific feature enhancements.

So what can it do? Well, here is the feature list:

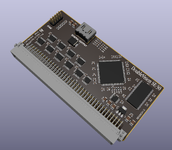

- HDMI video plug using DVI video signalling

- 32 MB VRAM framebuffer, clocked at 165 MHz

- Supports 1-bit, 2-bit, 4-bit, 8-bit, 15-bit, 16-bit and 32-bit color depths

- All standard VGA and Mac resolutions, up to 1280x1024@60p and 1920x1080@30p

- Fast 64-bit BitBlt engine, pushing up to 130 Mpixel/s (8bpp)

- 32 KB internal ROM for graphics drivers

- Firmware can be fully upgraded on the host system (via "FlashFPGA App" running on MacOS)

- Special Mac SE/30 features:

  - Card can work in dual mode (as a second monitor alongside the SE/30's internal monitor) or route the SE/30's internal video output to HDMI

  - If the Mac is booted with no HDMI display connected, the framebuffer memory is added as 32 MB of system memory

  - Elaborate write-through cache to minimise read/write latency to VRAM

  - Makes full use of the 68030's 2-cycle, 32-bit synchronous bus termination, yielding up to 32 MB/s host memory performance on a 16 MHz bus!
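
For anyone who wants to check the numbers in the list above, here is a small back-of-the-envelope sketch in Python. It works out the peak throughput of a 32-bit bus that completes one transfer every 2 clocks at 16 MHz, and how much of the 32 MB of VRAM a single frame takes at the largest listed resolutions. The function names are made up for illustration and have nothing to do with the actual firmware or driver. The sketch also assumes tightly packed rows, 15-bit color stored in 16-bit words, and "32 MB" meaning 32 MiB.

```python
# Rough sanity checks for the feature list. Everything here is illustrative;
# none of these names come from the DoubleVision firmware or driver.

def bus_throughput_bytes_per_s(bus_hz: int, bus_width_bits: int,
                               clocks_per_transfer: int) -> float:
    """Peak bytes/s when every transfer takes a fixed number of bus clocks."""
    return bus_hz * (bus_width_bits // 8) / clocks_per_transfer


def framebuffer_bytes(width: int, height: int, depth_bits: int) -> int:
    """Bytes for one frame.

    Assumptions: rows are tightly packed (no padding), and 15-bit color
    occupies a 16-bit word per pixel.
    """
    storage_bits = 16 if depth_bits == 15 else depth_bits
    return (width * height * storage_bits + 7) // 8


# Assumption: the "32 MB" of VRAM is 32 MiB.
VRAM_BYTES = 32 * 1024 * 1024

if __name__ == "__main__":
    # 68030 synchronous termination: 32-bit transfer every 2 clocks at 16 MHz.
    peak = bus_throughput_bytes_per_s(16_000_000, 32, 2)
    print(f"2-cycle 32-bit bus at 16 MHz: {peak / 1e6:.0f} MB/s peak")

    # Frame size at the two largest listed modes, in 32-bit color.
    for w, h in [(1280, 1024), (1920, 1080)]:
        size = framebuffer_bytes(w, h, 32)
        print(f"{w}x{h} at 32bpp: {size / 2**20:.2f} MiB "
              f"of {VRAM_BYTES // 2**20} MiB VRAM")
```

The bus figure comes out at the quoted 32 MB/s (4 bytes every 2 clocks at 16 MHz). A 1920x1080 frame in 32-bit color needs about 7.9 MiB, so under these assumptions even the largest mode uses only a fraction of the VRAM.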

The card itself is low-profile and has an internal HDMI port, which can be routed to the outside of the SE/30 via a small bracket.

As I said, most of the development is already done, since the design works great on the Amiga. I therefore expect the most challenging part for me will be writing the graphics ROM driver for the Mac to enable display output. I have already started looking into this. ;-)

So for now, let's start with a first render of the PCB, which I expect will be nearly identical to the final board.

As always, questions, comments, etc. are welcome.