A P5200 is just a P6200 with extra commitments / more baggage.

A P5200 is just a P6200 with more swivel.

@David Cook

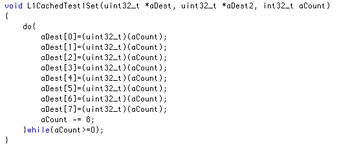

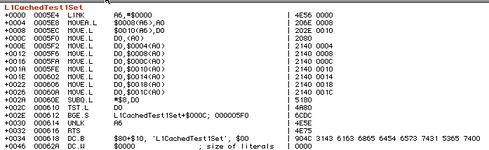

@David Cook I think the app as it currently is (or how you've modified it) is worth testing on the P6200 and P630 to see what the results are, if it's no inconvenience to get them out. Given that DRAM is so much slower than cache, I'm not yet sure how to properly distinguish the '040 bus speed in software from DRAM speeds. I'd need to write 2x or 4x 32-bits at a time instead of 8x 32-bits. One way would be to fill all the L1 cache lines, then any further write would cause a flush. Writing 4x 32-bits to Valkyrie would fill its transaction buffers and then I'd need to pause for them to empty and then repeat. So, the pause would depend upon the '040 bus speed and the Valkyrie write speed (which is also a function of the CPU clock speed).

The real question is: if the bandwidth of VRAM writes is less than the bandwidth of the bus, how do you estimate the bandwidth of the bus?

I think the solution is to perform 4 writes to VRAM (which depends upon the bus bandwidth), then wait long enough for the transactions to complete. For example, let's say the P6200 runs at 37.5MHz. We write 4 words, which is 2 x 64-bit words, so there's a single double-word write to the latches, and then the CPU bus has to wait for the '040 bus transaction to complete; then the latches can be filled with the next 64 bits and the CPU bus is free. The key thing is there is a single '040 bus transfer + a 128-bit (16-byte) transfer on the 603 bus + a wait. This is tQw.

Similarly, if we have a 64-bit write then a pause, we incur zero '040 bus transfers + a 64-bit (8-byte) transfer on the 603 bus + the wait, and because the burst transfers all take 1 cycle per written double-word, the 603 bus is stalled for 1 less cycle. This is tDw. tQw-tDw is the number of 603 CPU bus cycles taken for the first 2 words to be transferred on the '040 bus.
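To make the tQw-tDw idea concrete, here is a rough sketch of how two timed runs could be turned back into 603 bus cycles per loop. The function name, its parameters, and the 60 ticks per second figure are my assumptions for illustration, not anything measured yet.

```c
/* Hypothetical helper: convert the difference between two timed runs
   (quad-word run and double-word run, both in ticks) into 603 bus
   cycles per loop iteration. Assumes 60 ticks per second. */
static double bus_cycles_per_loop(long ticks_quad, long ticks_double,
                                  double bus_hz, double loops)
{
    /* Time spent only on the extra '040 bus transfer, in seconds. */
    double seconds = (double)(ticks_quad - ticks_double) / 60.0;

    /* Spread that time over every loop and express it in bus clocks. */
    return seconds * bus_hz / loops;
}
```

For instance, with a 37.5MHz 603 bus and 100M loops, a difference of 800 ticks between the two runs would work out to about 5 bus cycles per loop.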

In the worst case, a 25MHz '040 bus, each quad word takes 5*3=15 CPU cycles, but the important bit is the first 64 bits, which will take 3*3=9 CPU cycles; I guess this would be rounded up to 10 CPU cycles, i.e. 5 CPU bus cycles. I don't think the 33MHz '040 bus case makes sense, as it involves the '040 bus running 25/11x slower than the CPU clock. Even so, the critical double-word would be 3*25/11=6.818, so 7 cycles, rounded to 8 cycles, i.e. 4 CPU bus cycles. If the '040 bus ran at 37.5MHz, it would match the CPU bus, which is neat. The critical double-word would then be 3*2=6 cycles, or 3 CPU bus cycles.
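The arithmetic above can be sketched in a few lines of C. This assumes a 75MHz core with the 603 bus at half that, and 3 '040 bus clocks for the critical double-word; the function name and the kHz units are just my choices for the sketch.

```c
/* Hypothetical helper: 603 bus cycles taken by the critical first
   double-word. Assumes the transfer costs 3 '040 bus clocks and that
   one 603 bus cycle is 2 core clocks. Frequencies are in kHz. */
static unsigned critical_bus_cycles(unsigned long core_khz,
                                    unsigned long bus040_khz)
{
    unsigned long num = 3UL * core_khz;   /* 3 '040 clocks, scaled to core clocks */
    unsigned long den = 2UL * bus040_khz; /* 2 core clocks per 603 bus cycle */

    /* Round up to a whole number of 603 bus cycles. */
    return (unsigned)((num + den - 1UL) / den);
}
```

With a 75000kHz core this gives 5, 4 and 3 bus cycles for '040 bus speeds of 25000, 33000 and 37500kHz, matching the hand-worked numbers.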

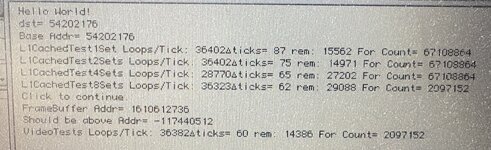

This means we need a test which can distinguish between 5, 4 and 3 CPU bus cycles over a period much longer than that. My timing mechanism is accurate to a couple of % over a 1s period. If the bandwidth for VRAM is 7.5MB/s (1.875MW/s), that's a massive 88 cycles per word to wait at 166MHz, or 354 cycles for 4x long words. So, if I round to a delay of 400 nops that's probably OK.
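As a sanity check on the wait length, here's a small sketch of the calculation. The function name and parameters are hypothetical, and the 166MHz clock is simply the figure the 88 and 354 cycle numbers correspond to.

```c
/* Hypothetical helper: core clocks needed for `words` 32-bit writes to
   drain at a given VRAM write bandwidth (bytes per second).
   The result is truncated to whole cycles. */
static unsigned long drain_cycles(unsigned long core_hz,
                                  unsigned long vram_bytes_per_s,
                                  unsigned words)
{
    /* 64-bit intermediate so bytes * core_hz cannot overflow. */
    unsigned long long bytes = 4ULL * words;

    return (unsigned long)((bytes * core_hz) / vram_bytes_per_s);
}
```

At 166MHz and 7.5MB/s this gives 88 cycles for one word and 354 for four, so 400 nops leaves a bit of margin, assuming each nop costs at least one cycle.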

If I do 100M loops, then a difference of a single cycle per loop will amount to a measurable amount of time at 166MHz: about 36 ticks. I just need to work out how to add a NOP in CodeWarrior. Each loop is then: { Write 2 (or 4) words to non-cacheable VRAM, wait 400 nops }. This will be a PPC-only test. Thank goodness I'm not making assumptions within this test!
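A minimal sketch of what that loop might look like in C. Everything here is an assumption on my part: the `DELAY_NOP` macro uses GCC-style inline asm as a stand-in (the CodeWarrior syntax still needs working out), the nops are issued from a counted loop rather than unrolled (so loop overhead adds to the wait), and `dst` would have to point at non-cacheable VRAM on the real machine.

```c
#include <stdint.h>

/* Stand-in for a single nop. GCC-style asm is assumed here; other
   compilers need their own inline-assembler equivalent. */
#if defined(__GNUC__)
#define DELAY_NOP() __asm__ __volatile__("nop")
#else
#define DELAY_NOP() ((void)0) /* placeholder: substitute a real nop */
#endif

/* Hypothetical test loop: write `words` 32-bit values to dst, then
   wait `nops` nops, repeated `loops` times. Returns the total number
   of words written. */
static unsigned long write_then_wait(volatile uint32_t *dst, unsigned words,
                                     unsigned long loops, unsigned nops)
{
    unsigned long i, total = 0;
    unsigned w, n;

    for (i = 0; i < loops; i++) {
        for (w = 0; w < words; w++) {
            dst[w] = 0xA5A5A5A5u;   /* volatile store, not optimised away */
            total++;
        }
        for (n = 0; n < nops; n++)
            DELAY_NOP();            /* give the writes time to drain */
    }
    return total;
}

/* Ticks added by `extra_cycles` core clocks per loop, assuming
   60 ticks per second. The result is truncated to whole ticks. */
static unsigned long extra_ticks(unsigned long loops, unsigned extra_cycles,
                                 unsigned long core_hz)
{
    return (unsigned long)(((unsigned long long)loops * extra_cycles * 60ULL)
                           / core_hz);
}
```

Running it once with 2 words and once with 4, then subtracting the two times, is the idea; `extra_ticks(100000000UL, 1, 166000000UL)` gives the 36 ticks per cycle of difference mentioned above.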