NuMac Emulation Progress & The MiniMixedModeManager (MinxedMan)

So, I've made a bit of progress on the NuMac Emulation. I've built a new copy of the PCE Mac Plus emulator, but more importantly, I now have a working M88K gcc compiler (3.3.6) via the GXemul emulator, which emulates the Luna88K, one of the few Motorola 88K computers. I can also transfer data in and out of it via an MS-DOS FAT disk image.

It's a bit crude, obviously. I had tried some other approaches and I'll take you through a bit of that pain first and why I ended up doing it this way.

GCC supported the m88k fairly early on. At the time, the m88k was one of the important new architectures that made gcc more generically portable and therefore more usable, primarily because gcc was the only alternative to the official (non-free) Motorola compiler. I thought gcc still supported the m88k, but in reality support was dropped after version 3.3.6, sometime in the Noughties. I only know all this because someone is building an M88K LLVM back-end.

I grabbed V3.3 from GitHub (I'm not sure if it was 3.3.6) and binutils 2.16. I struggled to get it built on my Mac mini under macOS Catalina (Gcc 4.7 or so?): the source kept failing to `#include <stdlib.h>`, because that depended on some defines that weren't defined in my macOS environment after `./configure some_parameters`. Even after I sorted that out, it complained at the link stage because it didn't seem to be able to link in `.dylibs`. So then I thought, OK, I'll install Linux as a VirtualBox.org VM on my Mac. I need to learn quite a bit more about virtual machine environments, so it's a good education.

I got a bit further with that: I could compile binutils 2.16 for m88k-coff (but not m88k-elf), but I couldn't compile gcc 3.3 for either m88k-none-coff or m88k-none-elf. So, if anyone knows how to do that from source, or even how to do it under macOS Catalina, that'd be welcome. For the general compiling process I followed https://www.niksula.hut.fi/~buenos/cross-compiler.html but I also used a page specifically about compiling gcc for macOS.

Because I wasn't sure whether I needed a yet earlier version of gcc, and if so which binutils was compatible and what the object format was, I figured it was better to go the emulation route, because then I'd be able to find out directly. And that turned out to be fairly easy. The GXemul emulator is small (just 6MB of compressed source) and simple to configure and make. It was pretty simple to run it with a pre-built OpenBSD image, and once I'd created a FAT16 disk image without any partitions using DiskUtil, I could get GXemul to mount it, so now I can transfer data in and out.

So, now I can start work on compiling PCE's 68K emulator (or another standalone, more efficient 'C' emulator if I find it). I'll need to add some basic code for booting (but the M88K really does boot from 0), but the emulation environment itself is relatively simple if I ignore M88K exceptions and run it with the MMUs off. I still have to map M88K interrupts to 68K interrupts, but I don't think that's too hard. It means I could in theory support System 6 or System 7, since I'll be emulating 24-bit mode code.

Now onto the next bit:

The MiniMixedModeManager (MinxedMan)

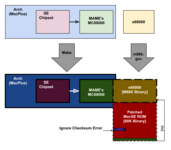

The PPC Mixed Mode Manager is actually fairly complex, far more complex than I would be able to recreate for the M88K demo. For 100% 68K emulation (the initial case) it's not too bad, because we won't be able to execute native code at all! But as soon as we can execute native code, even in terms of M88K pre-built libraries as proposed earlier, some kind of mini-mixed-mode manager is needed.

To make this feasible at a hobby development level, MinxedMan needs to grossly simplify the model. The PPC Mixed Mode Manager probably took a number of years to become fully comprehensive (i.e. recreating all the new Toolbox headers) and I can't spare that kind of time to reimplement the same system (even if I could use the same headers, though that's a thought). Also, the NuMacs are intended to be earlier systems, so they would be less complex anyway. Finally, there are always multiple engineering solutions to any one problem, with different trade-offs. Although the only thing I initially want to demonstrate is executing pure native functions (leaf functions that don't switch back to the emulator), I need to consider what the model would be if it were comprehensive.

A while back I figured that an easy way to implement 68K to M88K mode switches would be to place odd jump addresses in the jump table. The jump table is set up by the segment loader, so for M88K native code support the M88K segment loader is changed to look for corresponding CdeR code resources and substitute them, filling in odd jump addresses as appropriate.

The 68K emulator would generate an address exception, and the exception would trigger the mode switch. That would work because the M88K can generate alignment errors on 16-bit and 32-bit data loads from odd addresses and, of course, all 68K code is just data to the M88K. So this kind of mixed mode manager can use the odd address technique to switch from 68K to M88K, but it only helps in that direction: from what I've read, M88K calls or jumps to an odd address just zero the bottom 2 bits, so for native code the odd bit doesn't matter (there's no unaligned address exception for code on the M88K).
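The odd-address idea can be sketched as a few host-side helpers. This is only an illustration under stated assumptions: the names `tag_native`, `classify_target` and `native_entry` are hypothetical, and the code models the decision the emulator's address-error path would make rather than real M88K exception handling.

```c
#include <stdint.h>

/* Hypothetical execution modes for a jump-table target. */
typedef enum { MODE_68K, MODE_M88K } ExecMode;

/* Tag a native routine address as "native" by setting bit 0.
   Assumption: M88K code is 4-byte aligned, so bit 0 is otherwise unused. */
uint32_t tag_native(uint32_t m88k_addr)
{
    return m88k_addr | 1u;
}

/* The decision made on an address error: an odd target means switch to
   native mode, an even target is ordinary 68K code. */
ExecMode classify_target(uint32_t target)
{
    return (target & 1u) ? MODE_M88K : MODE_68K;
}

/* Recover the real native entry point by clearing the bottom 2 bits,
   mirroring what the text says the M88K does on calls and jumps. */
uint32_t native_entry(uint32_t target)
{
    return target & ~3u;
}
```

A segment loader following this scheme would store `tag_native(addr)` in the jump table for each substituted CdeR routine and leave 68K entries untouched.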

However, it turns out there's a better way by using the M88K's MMU (in both VM and physical addressing mode). Let's consider how it works with VM on. The technique is simply that an M88K routine placed in the jump table (or as a ToolBox routine) would cause a page fault if the emulator tried to interpret it, because it would have to be execute-only on the M88K. And similarly, a 68K routine placed in the jump table (or ToolBox) would cause M88K execution to page fault for the opposite reason: it can't jump to 'code' in a data page. So, we diagnose the page fault to determine if we need a mode switch.
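Diagnosing the page fault might look something like the sketch below. Everything here is an assumption made for illustration: the per-page `PageKind` table, the `FaultKind` values and `diagnose_fault` are hypothetical, and a real handler would read the fault details from the M88K MMU rather than take them as arguments. The sketch also treats any emulator data read of a native page as an opcode fetch, which a real implementation would need to distinguish.

```c
#include <stddef.h>
#include <stdint.h>

#define PAGE_SHIFT 12u   /* 4kB pages, as used elsewhere in this post */

/* Hypothetical per-page classification kept by the mixed mode manager. */
typedef enum { PAGE_UNMAPPED, PAGE_68K_DATA, PAGE_M88K_EXEC } PageKind;

/* What kind of access faulted. */
typedef enum { FAULT_DATA_READ, FAULT_INSN_FETCH } FaultKind;

/* What the fault handler should do next. */
typedef enum { ACT_REAL_FAULT, ACT_SWITCH_TO_M88K, ACT_SWITCH_TO_68K } FaultAction;

FaultAction diagnose_fault(const PageKind *page_kinds, size_t n_pages,
                           uint32_t addr, FaultKind kind)
{
    size_t page = addr >> PAGE_SHIFT;
    if (page >= n_pages)
        return ACT_REAL_FAULT;

    /* The emulator tried to read native code as if it were 68K opcodes. */
    if (kind == FAULT_DATA_READ && page_kinds[page] == PAGE_M88K_EXEC)
        return ACT_SWITCH_TO_M88K;

    /* Native code jumped into a data page that holds 68K code. */
    if (kind == FAULT_INSN_FETCH && page_kinds[page] == PAGE_68K_DATA)
        return ACT_SWITCH_TO_68K;

    return ACT_REAL_FAULT;
}
```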

So, you don't need unaligned function pointers: bit 0 is marked safe!! You just need to assign M88K CdeR segments in application code space.

This technique would be very versatile: you wouldn't necessarily need UPP routine descriptors, and it could work for all ISA switches for callbacks, VBLs, Toolbox patches etc. There's still the issue of mapping the ABI between the different ISAs (which routine descriptors handle too), but I'll come to that shortly.

There are two significant downsides to the technique:

- The application heap per application could be quite a bit bigger for mixed-mode applications, because all CdeR resources have to be on distinct physical (and virtual) pages, and especially because in this system there's no file mapping. This can, in fact, be largely solved fairly easily using what I'm calling Logical Memory.

- In the PPC Mixed Mode Manager, there's a lot of SDK (and OS) support to make it easier to write PPC applications, to convert (or partially convert) existing applications to PPC, or to write applications that compile transparently for both targets (hence the PPC comments in the "Would like to development a new THINK Pascal App for 68K" topic). In this system, because of the use-cases and practicality, relatively little M88K code would be written, so a higher burden is put on the application developer instead. I don't intend to fix this problem.

Logical Memory

So, the way to overcome the extra physical memory usage is to use the MMU regardless of whether VM is turned on or off. Having the MMU on in either case may also be how 68030 and 68040 Macs behave even when VM is turned off, because it's very handy for remapping memory arbitrarily (e.g. covering up gaps in the memory map). It doesn't really matter if that's not what the ≥68030 ROM and System 7 actually do: the M88K can do it. When VM is off, there's a logical to physical translation where the logical address space = the physical address space (+ extra entries to handle I/O and ROM space).

In 680x0 System 7 VM, as far as I understand, when VM is turned on the logical address space is organised the same way as physical memory, except that the logical address range is the size of your VM swap and physical pages are a cache of the current virtual pages (except for the system heap, which must be in physical memory).

The trick here is that even if all these rules apply, your logical address space can be larger than your virtual address space (i.e. swap space). For example, your VM manager could allocate applications on 1MB boundaries in the logical address space, leading to an average of 0.5MB gaps between applications (or more if the average application size was <0.5MB). And this is OK as long as the total number of logical pages actually used ≤ the number of pages in VM memory. To take a very trivial example, let's say the VM memory size is 8kB (2 pages) and we have two tiny 4kB apps: Hello and Bye. Hello is loaded first and is assigned the logical address space 0x300000 to 0x3FFFFF, but its SIZE resource says just 4kB, so it's mapped to the first VM page. Bye is loaded second and is assigned the logical address space 0x400000 to 0x4FFFFF, with SIZE=4kB, and is therefore mapped to the second VM page. There are big logical address gaps that just can't be used by the applications. The page directories and tables would reflect this with entries that are invalid.

So, logical Address space ≥ Virtual address space ≥ Physical address space.
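The Hello and Bye example can be modelled with a small sketch. The structures and function names (`LogicalSpace`, `AppMapping`, `load_app`, `translate`) are hypothetical and only illustrate the bookkeeping. It assumes 4kB pages, one 1MB logical slot per application, and VM pages handed out in order. A real implementation would fill in page directories and tables instead.

```c
#include <stdint.h>

#define PAGE_SIZE 0x1000u      /* 4kB page */
#define SLOT_SIZE 0x100000u    /* 1MB logical slot per application */
#define NO_PAGE   UINT32_MAX   /* marks an invalid (unmapped) logical address */

/* Hypothetical allocator state for the logical address space. */
typedef struct {
    uint32_t vm_pages_total;   /* size of VM memory in pages */
    uint32_t vm_pages_used;    /* pages already handed out */
    uint32_t next_slot_base;   /* logical base for the next application */
} LogicalSpace;

/* Where one application landed. */
typedef struct {
    uint32_t logical_base;
    uint32_t first_vm_page;
    uint32_t n_pages;
} AppMapping;

/* Give an application a 1MB logical slot but only as many VM pages as its
   SIZE asks for. Returns 0 on success, -1 if it cannot be placed. */
int load_app(LogicalSpace *ls, uint32_t size_bytes, AppMapping *out)
{
    uint32_t n = (size_bytes + PAGE_SIZE - 1u) / PAGE_SIZE;
    if (size_bytes > SLOT_SIZE || n > ls->vm_pages_total - ls->vm_pages_used)
        return -1;
    out->logical_base  = ls->next_slot_base;
    out->first_vm_page = ls->vm_pages_used;
    out->n_pages       = n;
    ls->vm_pages_used  += n;
    ls->next_slot_base += SLOT_SIZE;
    return 0;
}

/* Translate a logical address to a VM page index, or NO_PAGE for the gaps. */
uint32_t translate(const AppMapping *m, uint32_t addr)
{
    if (addr < m->logical_base)
        return NO_PAGE;
    uint32_t off = addr - m->logical_base;
    if (off >= m->n_pages * PAGE_SIZE)
        return NO_PAGE;
    return m->first_vm_page + off / PAGE_SIZE;
}
```

With `vm_pages_total` set to 2 and `next_slot_base` set to 0x300000, loading two 4kB apps places them at 0x300000 and 0x400000 on VM pages 0 and 1, and a third load fails because no VM pages remain.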

So, the technique for mixed M88K / 68K apps is to play around with the logical application heap size so that M88K CdeR segments can be kept all together (and thus save space by not being aligned on 4kB pages); and also kept separate from 68K CODE segments.

A mixed 68K / M88K application could do this as follows. It defines a minimal and preferred SIZE resource as per normal, but it also internally defines minimal and preferred SIZEs for 68K and M88K code requirements for the M88K execution environment. For example, a pure 68K application might need at least 256kB for code in the application heap, though there's 384kB of code, while the mixed environment might need 192kB for 68K code and from 64kB to 128kB for M88K code (giving a total of 512kB). So, if you're running it on an M88K then the logical space assigned for M88K code is 128kB (which could be assigned after the Master pointers or perhaps even before), and the 68K application heap space follows.

Because the M88K code is in its own logical space, its code segments can be purged (down to 64kB) to make room for 68K code (e.g. UI code) and vice-versa (e.g. for some high-speed rendering) without the total exceeding the application heap size at any one point and without M88K code segments colliding with 68K code segments or without M88K code segments wasting parts of 4kB pages.

Consider a case where M88K code needs to load a code segment, but the application heap is 'full' and contains M88K or 68K code and data resources that can be purged. The memory manager performs basically the same actions as normal. It first purges resources for its own ISA (in this case purging code segments not in the current call stack). If there's still not enough space for the code segment, it reallocates any possible fragmented logical pages. If there's still not enough space, it applies the same algorithm to 68K code segments (or other resources), until space is found for the needed M88K code segment. Thus the memory manager and segment loader have the same kind of behaviour, but are slightly more flexible in that they can reduce some wasted memory due to fragmentation.

It's the programmer's job to make sure the application still functions given these more fluid constraints. There's a bit of extra VM complexity, possibly an extra layer of page mapping, but that's small (I think) compared with a more comprehensive PPC-style mixed mode manager. It would handle VBL and Time Manager tasks and callbacks the same way (you lock code segments at the top or bottom of the application heap(s) as needed).
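The purge order could be sketched roughly like this. It is a simplified model with hypothetical names (`Segment`, `purge_isa`, `make_room`): it tracks only byte counts, leaves out the step of reallocating fragmented logical pages, and treats "in the current call stack" as a plain flag.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

typedef enum { ISA_68K, ISA_M88K } Isa;

/* Hypothetical record for one loaded code segment. */
typedef struct {
    Isa      isa;
    uint32_t size;           /* bytes occupied while loaded */
    bool     loaded;
    bool     in_call_stack;  /* segments in use must not be purged */
} Segment;

/* Purge segments of one ISA until enough bytes are free (or none are left). */
static uint32_t purge_isa(Segment *segs, size_t n, Isa isa,
                          uint32_t free_bytes, uint32_t need)
{
    for (size_t i = 0; i < n && free_bytes < need; i++) {
        if (segs[i].loaded && segs[i].isa == isa && !segs[i].in_call_stack) {
            segs[i].loaded = false;
            free_bytes += segs[i].size;
        }
    }
    return free_bytes;
}

/* Make room for a segment of `need` bytes: purge the requester's own ISA
   first, then fall back to the other ISA. Returns true if space was found. */
bool make_room(Segment *segs, size_t n, uint32_t *free_bytes,
               uint32_t need, Isa requester)
{
    Isa other = (requester == ISA_M88K) ? ISA_68K : ISA_M88K;
    *free_bytes = purge_isa(segs, n, requester, *free_bytes, need);
    if (*free_bytes < need)
        *free_bytes = purge_isa(segs, n, other, *free_bytes, need);
    return *free_bytes >= need;
}
```

The same function serves a 68K request by passing `ISA_68K` as the requester, which is the "vice-versa" case described earlier.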

There's one more thing to take into account: ABI differences. This technique doesn't have the same kinds of UPPs or Routine Descriptors to handle conversions, but the same issue still exists. The solution here is that for routines that can be called from a different ISA, the programmer provides the conversion by writing a function that handles it:

C:

    #include <stdint.h>

    /* t68KRegs (the emulated 68K register file) and tMyReturnType are
       assumed to be defined elsewhere. */
    typedef void (*MixProc)(void);

    tMyReturnType MyFuncM88K(char *aSrc, char *aDst, uint32_t aLen);
    typedef tMyReturnType (*tMyFunc)(char *aSrc, char *aDst, uint32_t aLen);
    void MyFuncM88KAbi(t68KRegs *aRegs, MixProc aProc);

    // A manually defined routine descriptor.
    // Entry 0 is pseudocode: it stands for a 4-byte M88K branch
    // instruction to MyFuncM88K, which plain C can't express.
    const MixProc gMyFuncMix[2]={
        (MixProc)__asm("bsr %0",&MyFuncM88K),
        (MixProc)&MyFuncM88KAbi
    };

    // Now the function looks like a proper function, but it's not:
    // the call executes the branch stored in entry 0.
    #define MyFunc(aSrc, aDst, aLen) \
        ((*(tMyFunc)gMyFuncMix)((aSrc), (aDst), (aLen)))

    /**
     * M88K to M88K call, indirectly via MyFuncMix
     * or just directly.
     */
    tMyReturnType MyFuncM88K(char *aSrc, char *aDst, uint32_t aLen)
    {
        // action blah blah blah.
        return myReturnValue;
    }

    /**
     * Programmer has to manually translate the ABI.
     * Most easily by debugging the 68K version to see
     * how all the parameters are allocated.
     */
    void MyFuncM88KAbi(t68KRegs *aRegs, MixProc aProc)
    {
        tMyReturnType ret;
        // aSrc in a0, aDst on the stack above the return address, aLen in d0.
        ret=((tMyFunc)aProc)((char *)aRegs->a[0],
                             (char *)((long *)aRegs->a[7])[1],
                             (uint32_t)aRegs->d[0]);
        aRegs->d[0]=ret; // return ret in d0.
        aRegs->a[7]+=sizeof(long); // deallocate stack.
    }

Obviously you'd factor out common ABI translations. The way it works is that when MinxedMan is invoked, it calls the second entry (which is always 4 bytes after the first) instead of the first, passing the other ISA's context and the target routine address as the parameters. The second entry packages up the other ISA's parameters, then calls the target routine. Note that because the target routine is passed in the second parameter, the same ABI converter can be used for different routines where the same conversion is needed.
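To show one converter serving several routines, here is a host-runnable sketch. It is not the M88K code above: `Regs68K`, `Native3`, `read_be32`, `conv_a0_stack_d0`, `sum3` and `span` are all hypothetical, emulated memory is a plain byte array, and native routines take 32-bit emulated values instead of real pointers so that it runs on any host.

```c
#include <stdint.h>

/* Hypothetical emulated 68K register file (stand-in for t68KRegs). */
typedef struct { uint32_t d[8]; uint32_t a[8]; } Regs68K;

/* In this sketch, native routines take three emulated 32-bit values. */
typedef uint32_t (*Native3)(uint32_t src, uint32_t dst, uint32_t len);

/* Read a big-endian 32-bit value from emulated 68K memory. */
uint32_t read_be32(const uint8_t *p)
{
    return ((uint32_t)p[0] << 24) | ((uint32_t)p[1] << 16) |
           ((uint32_t)p[2] << 8)  |  (uint32_t)p[3];
}

/* One shared converter: argument 1 in a0, argument 2 on the stack above the
   return address, argument 3 in d0. The result goes back in d0. */
void conv_a0_stack_d0(Regs68K *r, const uint8_t *mem, Native3 target)
{
    uint32_t arg2 = read_be32(mem + r->a[7] + 4u);
    r->d[0] = target(r->a[0], arg2, r->d[0]);
    r->a[7] += 4u;   /* same stack adjustment as the example above */
}

/* Two unrelated routines that share the same calling pattern. */
uint32_t sum3(uint32_t a, uint32_t b, uint32_t c) { return a + b + c; }
uint32_t span(uint32_t a, uint32_t b, uint32_t c) { return (b - a) * c; }
```

Because the target is a parameter, `conv_a0_stack_d0` can be listed as the second entry for both `sum3` and `span` without writing a second converter.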

I think that covers this concept. I'll have goofed up some details about the SegmentLoader, Memory manager and Mixed Mode Manager, but I think the concepts are probably sound and I'll correct errors in due course (likely, assuming people read this and see obvious errors).

Thanks for trawling through this ongoing fiction!