
Powerbook 540c LCD, Can It Run In Grayscale Mode?

- Thread starter Paralel

- Start date

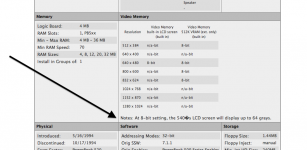

This isn't definitive, because the model number is one digit off, but if you Google the datasheet for the Sharp color LCD panel part number listed in the parallel thread, you'll find one for a panel that appears to be identical, and that datasheet says it's 12-bit (4 bits per channel) color. It's also worth noting that the "Service Source" hardware manual for the 500 series repeats the "16 greys" claim.

On the flip side, the "Developer Note" for the 500 series has a confusing section about the CLUT's 16-bit color mode. It explains how they've specially optimized yadda yadda blaw blaw blaw so the video system avoids attempting to display certain colors that don't render properly on the LCD, with the end result that the passive color displays can show a range of "about 4000" colors, while the range for the TFT displays is "around 24,000".


That latter number is more than 12 bits can address (2^12 = 4,096), which implies that even *if* the LCD's interface is only 12 bit, the video hardware may do some sort of time-based multiplexing/dithering to increase the available color range (resulting in the "flicker"?). Perhaps that's the source of the "64 grey" claim, *or* perhaps whoever typed that up confused the LCD's capabilities with those of the external video port. (15/16-bit "highcolor" RAMDACs, which is what the developer note says the CLUT is part of, usually only had 6-bit DACs.) All we really know is that the video hardware *logically* supports "256 greys". As the Developer Note puts it:

> The display controller IC contains a 256-entry CLUT. Although the CLUT supports a palette of 32,768 colors, many of the possible colors do not look acceptable on the display. Due to the nature of color LCD technology, some colors are dithered or exhibit noticeable flicker. Apple has developed new gamma tables for these displays that minimizes flicker and optimizes available colors. With these gamma tables, the effective range of the CLUT for the active matrix color display is about 24,000 colors; for the DualScan color display, the effective range is about 4000 colors.
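The "time-based multiplexing/dithering" guess above is usually called frame rate control (FRC). Here's a minimal Python sketch of the arithmetic, assuming a hypothetical 4-bit-per-channel panel and a two-frame cycle that only blends adjacent levels. It illustrates the general technique; it is not a description of what Apple's display controller actually does.

```python
from fractions import Fraction


def frc_levels(native_bits: int, frames: int) -> list[Fraction]:
    """Perceived intensities (0..1) reachable by temporal dithering.

    A pixel alternates between two adjacent native levels and is shown at
    the higher level on k of `frames` frames; the eye is assumed to average them.
    """
    top = 2 ** native_bits - 1
    perceived = set()
    for low in range(top + 1):
        high = min(low + 1, top)
        for k in range(frames + 1):
            average = Fraction(low * (frames - k) + high * k, frames)
            perceived.add(average / top)
    return sorted(perceived)


per_channel = len(frc_levels(4, 2))  # hypothetical 4-bit panel, 2-frame cycle
print(per_channel)         # 31 (16 native levels plus 15 midpoints)
print(per_channel ** 3)    # 29791 candidate colors, before discarding flickery ones
```

Even this simple two-frame scheme pushes a 12-bit panel well past 4,096 colors; Apple's "about 24,000" could then be what's left after throwing out the combinations that flicker visibly.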

(Unfortunately the manual doesn't actually have the pinout of the LCD connector so we can't count data pins.)

In any case, fretting about exactly how many greys an 18 year old laptop does or does not display is probably a little silly in the grand scheme of things.

It's almost worth starting "The Grayscale Thread" in Peripherals for this discussion!

I've heard it said that the human eye can't distinguish more than 256 shades of gray, but I've often wondered about that. Is it possible to define 512 distinct gradations of gray in a 24bit color graphic? If so, it would be interesting to do that and check it against a matching 256 level grayscale chart in the top half of a comparison file. Looking for a difference in the banding between top and bottom halves of the graphic might prove informative.


If the CLUT is capable of 32,768 colors, then it's 15-bit, meaning there are 32 shades of gray at most. Assuming it is most likely a 12-bit panel, it can only truly show 16, which puts it on par with the 520, just with active matrix. The active matrix part alone makes it worthwhile. This passive matrix stuff is driving me nuts.
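A brute-force check of the "32 greys at most" arithmetic: enumerate every packed RGB value and count the ones where all three channels are equal. This is a minimal Python sketch assuming a plain packed layout with equal bits per channel (red in the high bits); `true_greys` is a hypothetical helper, not anything from the Developer Note.

```python
def true_greys(bits_per_channel: int) -> int:
    """Count packed RGB values whose three channels are equal (R == G == B)."""
    mask = (1 << bits_per_channel) - 1
    count = 0
    for word in range(1 << (3 * bits_per_channel)):
        r = (word >> (2 * bits_per_channel)) & mask
        g = (word >> bits_per_channel) & mask
        b = word & mask
        if r == g == b:
            count += 1
    return count


print(true_greys(5))  # 15-bit (32,768 colors): 32 greys
print(true_greys(4))  # 12-bit (4,096 colors): 16 greys
```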

> Is it possible to define 512 distinct gradations of gray in a 24bit color graphic?

Not true greys, no. You could certainly make an image that included the 256 real greys alongside another 256 shades in which the RGB values are not exactly equal. (I.e., in addition to using #000000 through #FFFFFF, you could add some arbitrary stripes where you subtract 1 from one of the RGB channels, e.g. #FEFFFF.) But by definition those extra "greys" will actually be subtly "sepia toned". WHICH IS CHEATING.
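The "sepia-toned stripes" idea, as a minimal Python sketch. `grey_strip` and `to_hex` are hypothetical helpers, and lowering the red channel (rather than green or blue) is an arbitrary choice that follows the #FEFFFF example.

```python
def grey_strip(with_near_greys: bool) -> list[tuple[int, int, int]]:
    """Dark-to-light strip of 24-bit colors.

    Always includes the 256 true greys (R == G == B). If requested, each
    grey after black is preceded by a 'near-grey' whose red channel is one
    step darker, e.g. #FEFFFF just before #FFFFFF.
    """
    strip = []
    for v in range(256):
        if with_near_greys and v > 0:
            strip.append((v - 1, v, v))
        strip.append((v, v, v))
    return strip


def to_hex(rgb: tuple[int, int, int]) -> str:
    return "#{:02X}{:02X}{:02X}".format(*rgb)


strip = grey_strip(with_near_greys=True)
true_greys = [c for c in strip if c[0] == c[1] == c[2]]
print(len(strip), len(true_greys))      # 511 256
print([to_hex(c) for c in strip[-3:]])  # ['#FEFEFE', '#FEFFFF', '#FFFFFF']
```

Black can't be lowered any further, so this tops out at 511 entries rather than 512, and only 256 of them pass the R == G == B test.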

They do sell video cards and monitors capable of 30 bit color now, so if you really want 1024 true greys you can have it. A setup to do it will run you around three grand. I have to admit I'm sort of curious if a double-blind test between one of those systems and an "equally high quality" IPS panel+24 bit card would really show that humans can tell the difference between 16 million and one billion colors.
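For scale, the 30-bit vs 24-bit arithmetic: each 8-bit grey step covers four of the 10-bit steps a 30-bit setup can show. A minimal sketch assuming simple truncation of the low-order bits (real hardware may round or dither instead); `truncate` is a hypothetical helper.

```python
def truncate(level: int, from_bits: int, to_bits: int) -> int:
    """Reduce a level to fewer bits by dropping the low-order bits."""
    return level >> (from_bits - to_bits)


ten_bit = range(2 ** 10)                                 # 1024 greys per channel at 30-bit
eight_bit = {truncate(v, 10, 8) for v in ten_bit}
print(len(ten_bit), len(eight_bit))                      # 1024 256
print([v for v in ten_bit if truncate(v, 10, 8) == 1])   # [4, 5, 6, 7]
print(2 ** 24, 2 ** 30)                                  # 16777216 1073741824
```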

> If the CLUT is capable of 32,768, then it's 15 bit, meaning there are 32 shades of gray at most.

The misleading part is that the resolution of the CLUT doesn't necessarily tell you the resolution of the DACs. Using a CLUT for 15-bit color is actually unusual... (EDIT: Rest of this thought deleted. Glancing again at the piece of the manual I quoted, I see the CLUT in this Powerbook doesn't do 15-bit indexed color; its palette register is 8 bits long. Derp. In my defence, the verbiage of the service manual is nonstandard, since it says "CLUT" to refer to what in a VGA card would be the entire "RAMDAC", of which the CLUT is only a part, aka the palette register. Anyway...) All PC "highcolor" VGA cards that did 15- or 16-bit color, aka "Thousands of colors" in Mac terminology, still had *at least* 6-bit DACs in their RAMDACs; they just couldn't use the entire range when in highcolor mode. (Which leads to the odd situation that you could actually have more true grays running in an 8-bit grayscale mode than in "thousands of colors" mode, because, as you note, the effective grayscale resolution is only 5-bit in "thousands of colors" but 6- or 8-bit in the indexed mode.)
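The "more true grays in 8-bit grayscale than in thousands of colors" point, as a minimal Python sketch. It assumes a VGA-style RAMDAC with 6-bit DACs and a 5-bit-per-channel highcolor mode; the function names are hypothetical, and this models a generic PC card, not the PowerBook's controller.

```python
DAC_BITS = 6  # assumption: VGA-style RAMDAC with 6-bit DACs


def indexed_grey_levels() -> set[int]:
    """Distinct DAC outputs for a 256-entry black-to-white palette.

    All 256 palette entries ask for an intensity, but a 6-bit DAC
    can only output 64 distinct values.
    """
    full_scale = (1 << DAC_BITS) - 1
    return {i * full_scale // 255 for i in range(256)}


def highcolor_grey_levels() -> set[int]:
    """Distinct grey outputs in 15-bit 'thousands' mode.

    Each channel is 5 bits, so a grey (R == G == B) has only 32 possible
    values, shifted up to fill the 6-bit DAC range.
    """
    return {g << (DAC_BITS - 5) for g in range(32)}


print(len(indexed_grey_levels()), len(highcolor_grey_levels()))  # 64 32
```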

But, again, the DAC only applies to the external monitor port on a Powerbook. Given the evidence, I'd wager that the color LCD in the 540c could do 16 "real genuine according to Hoyle" greys, but when you have it set for grayscale it just might use dithering to fake a higher number. If someone has the part number for the grayscale LCD, they should Google it and see if they can find a datasheet. It's worth a shot.

Hard to say. If the panel is 6-bit, and the CLUT can use 6 of the 8 bits in the register in grayscale mode, it is possible it could display 64 shades of gray.

[Attachment: Screen Shot 2013-08-29 at 7.56.33 PM.png]

So does this mean they are telling a fib?

I don't think we'll ever know, since there are literally no search results returned for the part number of the 540 LCD.

Every now and then, I get a hankering for one of those huge Planar medical grayscale LCDs they use for displaying X-ray images. You can pick them up cheap, but alas they require a matching proprietary video card to drive them. Some ridiculous resolution like 2300x1600 @ 23" and presumably better than average gray distinction.

> In principle you could get more shades of grey if, instead of feeding a grayscale monitor conventionally, you combined the outputs of all three D/As via a resistor network (or some other method), but you'd have to write a custom driver to make a sensible grayscale palette out of it.

Interesting thought. I wonder if the "driver" side of things could be taken care of in one of the FPGA/CPLD-based panel driver boards from Chinese eBay sellers.

I reinstalled the 540 panel in the 520, and in Control Panel > Monitor > Options it actually says "640x480, 64 grays" so that footnote on the specifications page is correct. Apparently the LCD in the 540 is a true 6-bit panel.

What does it say under Control Panel > Monitor > Options for the 540c?


> Interesting thought. I wonder if the "driver" side of things could be taken care of in one of the FPGA/CPLD-based panel driver boards from Chinese eBay sellers.

The "driver" issues would actually be OS- and application-related. Let's say that you were to, via whatever method, modify a video card so it supported something ridonkulous like 24-bit grayscale. (The most accurate way I can think of, assuming we're going old-school and trying to do this with an analog monitor output, would be to make a board that had three very-high-speed 8-bit analog-to-digital converters on one side and a single 24-bit DAC on the other, connected to the single input on a true monochrome monitor. Digitize the incoming RGB signals into three bytes of data, stack them up, and use that on the output side as a single 24-bit value. Easy peasy, relatively. The simplest method would be to use a different-value resistor on each RGB line to combine them into one signal; see the comment below as to why, from a practical standpoint, the results would probably be indistinguishable.)

The problem now is that you'll have to tell the OS that it should treat its 24-bit framebuffer as grayscale, i.e., read the three bytes that were RGB as a single linear luminosity value instead of as three separate color channels. If you simply keep working with 24-bit images as 24-bit color, you might be able to display some pretty pictures, but they won't make a lick of sense if you view them on a normal machine. "Deep Color" support (i.e., support for 30/36/48-bit color depths) has been in Windows for a few years, but googling seems to indicate it's *not* really in OS X yet. (Oddly, there *was* apparently a 30-bit deep color card made by Radius for Macs in the '90s that was supported by Photoshop.) I have no idea if "Deep Color" support comes with an understanding of extended grayscale-only images, but it would seem to be a prerequisite for really making use of a deeper grayscale framebuffer.
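A minimal sketch of the "stack them up" idea, and of why the result looks like nonsense on a normal machine. `stack_bytes` and `as_rgb` are hypothetical helpers, and the byte order (red as the most significant byte) is an assumption.

```python
def stack_bytes(r: int, g: int, b: int) -> int:
    """Combine three 8-bit samples into one 24-bit grey value (R most significant)."""
    for sample in (r, g, b):
        if not 0 <= sample <= 0xFF:
            raise ValueError(f"sample out of range: {sample}")
    return (r << 16) | (g << 8) | b


def as_rgb(value: int) -> tuple[int, int, int]:
    """How an ordinary color machine would read the same 24-bit word."""
    return (value >> 16) & 0xFF, (value >> 8) & 0xFF, value & 0xFF


# Two adjacent steps on a smooth 24-bit grey ramp...
dark, next_step = 0x0000FF, 0x000100
print(as_rgb(dark), as_rgb(next_step))  # (0, 0, 255) (0, 1, 0)
```

One grey step up the ramp turns full-intensity blue into an almost-black green, which is the "won't make a lick of sense" problem in a nutshell.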

Of course, one thing to think about if you were doing this in analog is that the peak-to-peak voltage swing for a VGA (color or grayscale) monitor is only 0.7 volts. Even at 10-bit resolution, the difference between two adjacently shaded grayscale pixels is probably less than the voltage swings you'd get in the cable from, say, ripple from nearby AC feeds or the computer's power supply. 16 or 24 bit would be ludicrous overkill.
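Putting numbers on that: a minimal sketch assuming an ideal linear DAC spanning the full 0.7 V. It deliberately doesn't compare against a noise figure, since none is given here.

```python
FULL_SCALE_V = 0.7  # VGA video swing quoted above


def step_millivolts(bits: int) -> float:
    """Voltage between adjacent levels of an ideal linear DAC, in millivolts."""
    return FULL_SCALE_V / (2 ** bits - 1) * 1000


for bits in (6, 8, 10, 16, 24):
    print(f"{bits:2d}-bit: {step_millivolts(bits):.6f} mV per step")
```

At 10 bits the step is already under 0.7 mV; at 24 bits it's roughly 40 nanovolts.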

> Apparently the LCD in the 540 is a true 6-bit panel.

That doesn't actually surprise me. 6-bit grey resolution was what mono VGA was capable of, so it would make sense they'd manufacture panels that support it.

I'm sure with the color display the control panel says "256 greys". I would just wager that in practice they're dithered, not true.

What's interesting about this is that it means the CLUT register is capable of either 256 colors (3.3.2.0.0) or, at a minimum, a 6-bit linear grayscale value (6.0.0). Since grayscale values wider than a single color channel are possible in the register, it should technically be possible for it to output 256 true grayscale values with a true 8-bit grayscale panel attached. Of course, at that time, the most one ever saw were 6-bit panels. However, it would be fun to find a true 8-bit grayscale panel and see if the CLUT could drive it at the full 8-bit range. The OS is capable of handling 256 grays, so I don't see why not. A fun little hack to try. Unfortunately, it's far beyond my capabilities.
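Reading "3.3.2" as the common RGB332 layout (3 bits red, 3 green, 2 blue), which is an assumption about the notation, a minimal sketch shows why a straight grayscale interpretation of the same 8-bit register matters: packed RGB332 has only two true greys, while the full byte used as grey gives up to 256 levels, limited by how many bits the panel actually honors. The helper names are hypothetical.

```python
def rgb332_to_rgb888(byte: int) -> tuple[int, int, int]:
    """Expand an RRRGGGBB byte to 8-bit-per-channel RGB."""
    r = (byte >> 5) & 0b111
    g = (byte >> 2) & 0b111
    b = byte & 0b11
    return r * 255 // 7, g * 255 // 7, b * 255 // 3


def panel_grey_levels(panel_bits: int) -> int:
    """Distinct levels when an 8-bit grey value feeds a panel that only
    uses its top `panel_bits` bits."""
    return len({v >> (8 - panel_bits) for v in range(256)})


rgb332_greys = {c for c in map(rgb332_to_rgb888, range(256)) if c[0] == c[1] == c[2]}
print(sorted(rgb332_greys))                        # [(0, 0, 0), (255, 255, 255)]
print(panel_grey_levels(6), panel_grey_levels(8))  # 64 256
```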


Testing the Toshiba panel for the 540c in grayscale mode, it can display 27 distinct shades (black, white, and 25 shades of gray in between). This means it should be capable of displaying 19,683 (27³) colors at 640x480. So Apple's estimate of 24,000 isn't too far off. Since it can clearly display more than 16 shades (the most a 4-bit-per-channel panel could manage) but no more than 32, it must be a 15-bit panel. Really quite good for that time period.
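The arithmetic behind that conclusion, as a small sketch. It assumes each channel shows the same 27 levels (which the 19,683 figure implicitly assumes) and that the panel's bit depth is the smallest one that can represent them; `min_bits` is a hypothetical helper.

```python
def min_bits(levels: int) -> int:
    """Smallest number of bits that can encode `levels` distinct values."""
    return (levels - 1).bit_length()


observed = 27  # black, white and 25 greys counted on the Toshiba panel
print(observed ** 3)       # 19683 colors if every channel has the same 27 levels
print(min_bits(observed))  # 5 bits per channel, i.e. a 15-bit panel
print(min_bits(16))        # 4 bits per channel, i.e. a 12-bit panel
```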

So the 540 panel is indeed superior to the color panels at displaying grayscale, since it is capable of true 64-level grayscale. However, the 540c's panel is still superior to any of the 4-bit grayscale panels.

Unfortunately, the 540 panels suffer from terminal tunnel vision, as do all grayscale active matrix panels of that time period.

I'd be interested in someone with a Sharp 540c panel testing the grayscale gradients in grayscale mode. Since the colors on the Sharp panel appear more vibrant, I expect it is capable of displaying the full 32 shades of gray.


It would be fantastic if mcdermd could do this quick test, because I know for sure he has 540cs with both Toshiba and Sharp panels.

So maybe the people who purchased the 540 over the 540c weren't all about saving money; maybe they needed that screen for some B&W desktop publishing? Or maybe the 540 was more expensive than the 540c at the time?
