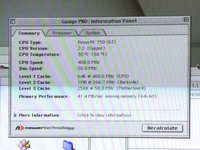

I wonder how the memory benchmarks compare to Gage Pro's Moving Memory Rate. I never really looked into what exactly it was measuring or how, but it was always pretty much on par with the memory performance of my PPC systems.

This one adds the dcbz test.

Renamed the previous tests to the names of the instructions they use.

There's a "### ms = ### s" line that compares the Microseconds timer to the time-of-day (seconds) timer. DingusPPC doesn't yet have a Microseconds timer that is tied to real time. SheepShaver seems to be a closer match, but I should test that with a different app that runs longer to get a more accurate comparison.
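For anyone who wants to try the same kind of check on a host machine, here is a minimal sketch of the idea, not the benchmark's actual code. It assumes Python's `time.perf_counter_ns()` as a stand-in for the Microseconds timer and `time.time()` as a stand-in for the time-of-day clock; the function name `compare_timers` is made up for illustration.

```python
import time
from typing import Callable, Tuple


def compare_timers(
    fine_us: Callable[[], float],
    coarse_s: Callable[[], float],
    work: Callable[[], None],
) -> Tuple[float, float, float]:
    """Time `work` with two clocks and report how well they agree.

    fine_us  -- hypothetical stand-in for a microsecond timer (returns microseconds)
    coarse_s -- hypothetical stand-in for a time-of-day timer (returns seconds)
    Returns (elapsed microseconds, elapsed seconds, ratio of the two).
    A ratio near 1.0 means the fine timer tracks real time.
    """
    start_us = fine_us()
    start_s = coarse_s()
    work()
    elapsed_us = fine_us() - start_us
    elapsed_s = coarse_s() - start_s
    # Guard against a zero reading from the coarse clock on very short runs.
    ratio = (elapsed_us / 1e6) / elapsed_s if elapsed_s else float("nan")
    return elapsed_us, elapsed_s, ratio


if __name__ == "__main__":
    # Assumed host clocks; the real test would use the Mac OS timers instead.
    fine = lambda: time.perf_counter_ns() / 1000.0
    us, s, ratio = compare_timers(fine, time.time, lambda: time.sleep(0.25))
    print(f"{us / 1000:.0f} ms = {s:.3f} s (ratio {ratio:.3f})")
```

The longer the timed work runs, the less the coarse clock's resolution matters, which is why a longer-running app should give a more trustworthy ratio.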

It did speed up if you interleaved the memory or added a G4.

As the moving memory rate went up, so did the PCI bus performance, but that had as much to do with system bus speed as it did with RAM.

Did PCs suffer from the slow PCI bus performance of OWMs, back when PCI 1.0 came out and PCs still used EDO or FPM RAM at 60ns or 70ns?

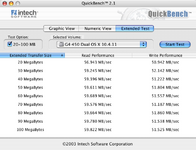

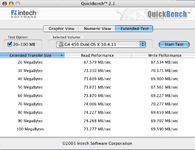

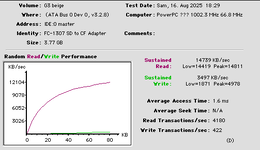

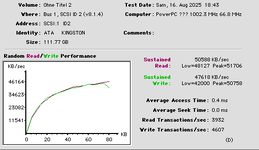

One of the benefits of this thread was to push the PCI bus and see what real-world throughput was on real Macs.

I never had an OWM with better than a 500MHz CPU. Did the faster CPUs improve PCI bus performance much, if at all?

I clock-chipped the Sonnet 450MHz G3 in my PM8600 with a 48.000MHz oscillator, but I don't recall if I ever saw any gains on the PCI bus.

It was a 3MHz gain on an underclocked Kansas board, because Sonnet wanted compatibility with the 45MHz bus Macs. Later all their upgrades went to 50MHz chips anyway, I think.

I know some people have taken their boards to 60MHz, and one guy even took his PCI OWM to 66MHz PCI.

I wonder what the combination would yield in RAM and PCI performance with one of the 500MHz+ G4 upgrades?

Could we get anywhere close to the 133MB/s max theoretical throughput of PCI?

Could we exceed it?

The best real-world number I verified was the SiL3112 in the MDD, with a 91MB/s peak on the SSD benchmark.

That's pretty good for 33MHz PCI, given all the overhead of drives and controller cards.
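To put the 91MB/s figure next to the 133MB/s ceiling, here is a quick back-of-the-envelope sketch. It assumes standard 32-bit PCI at a nominal 33.33MHz moving one 4-byte word per clock with no overhead; the function names are made up for illustration.

```python
def pci_peak_mb_per_s(clock_mhz: float = 33.33, bus_width_bits: int = 32) -> float:
    """Theoretical peak in MB/s: one full-width transfer per clock, no overhead.

    Assumes 1 MB = 10**6 bytes, which is how the 133MB/s figure is usually quoted.
    """
    return clock_mhz * (bus_width_bits / 8)


def bus_efficiency(measured_mb_per_s: float,
                   clock_mhz: float = 33.33,
                   bus_width_bits: int = 32) -> float:
    """Fraction of the theoretical peak that a measured rate achieves."""
    return measured_mb_per_s / pci_peak_mb_per_s(clock_mhz, bus_width_bits)


if __name__ == "__main__":
    peak = pci_peak_mb_per_s()
    eff = bus_efficiency(91.0)  # the SiL3112 peak reported in the MDD
    print(f"peak {peak:.1f} MB/s, measured 91.0 MB/s, efficiency {eff:.0%}")
    # Same formula for a bus pushed to 66MHz, as mentioned earlier in the thread.
    print(f"66MHz peak {pci_peak_mb_per_s(66.0):.1f} MB/s")
```

By that math 91MB/s is roughly 68% of the theoretical peak, and the only way past 133MB/s on a 32-bit bus is a faster PCI clock.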

If I remember correctly, the 1.6GHz G5 I have was slower than that with the same card and drive. I'll have to test it again to be sure.

The MDD is the 133MHz bus version, so pushing it to 167MHz or beyond may speed things up too.