By the time Commodore came out with the A3000 in the 1990s, it was undergoing Chapter 11 in the USA and dividing up the company in Europe. Their mistake was getting rid of Jack Tramiel, who held the company together and ran it with an iron fist. Remember, when Commodore got rid of Jack, he scooped up a dying company named Atari and brought it back to life just to get even with the company that he started and grew, but that bit him in the ass. If he had been more serious about it, the Atari ST would have been a real contender against the Amiga. He had already beaten the Mac with the ST - the first machine under $500, and later under $300, that could do nearly everything a Mac could do.

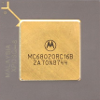

In short, if Jack Tramiel had stayed with Commodore, it would have had multi-layered boards like the Mac and Atari ST. But Commodore had to go cheap in order to survive the 1990s, and do it without Tramiel. The Amiga and the ST had sound and graphics chips the Mac did not. They both had a memory footprint the Mac wished it had. At the time, both the Amiga and the ST could emulate a Mac hands down while running their own software at the same time, at half the price of a Mac! The Mac could not do that at double its own price!

So - better sound, better graphics, faster I/O, and a bigger memory footprint, on the same CPU at the same speed without crippling it, at half the price: which is the better machine?

Again, if Jack Tramiel had stayed with Commodore, that A3000 board would have been 75% smaller, with multi-layered boards. He was Jobs with a better understanding of the technology. But he was not there when the A3000 came out, and Commodore was a dying company by then, so they had to build cheap and save money by eliminating R&D. With that in mind, now think: which one is "better"?

There is no "better" unless you want to compare and contrast. But compare what? Apple is better because ______. Commodore is better because _______. Atari is better because ________. Until you can fill in the blanks, this is just talk, and talk is cheap.