OK, so I've had a glass of wine, but here's the dumb idea. The Mac SE has a PDS slot (pages 445-446 of the Guide to the Macintosh Family Hardware), and the Guide explicitly says it can be used for memory expansion. So we decode another 3MB from $600000 to $8FFFFF and another 1MB from $C00000 to $CFFFFF, then write an INIT that modifies the System Heap so the top of RAM is $CFFFFF, with $400000 to $5FFFFF and $900000 to $BFFFFF pre-allocated as non-purgeable blocks. If each memory block begins with a header, the headers for those two blocks would sit just below $400000 and just below $900000. If it involves setting up a system master pointer instead, the appropriate adjustments would be made there.
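
A quick sanity check of the address arithmetic, as a Python sketch rather than anything that would run on the SE. The ranges are the ones proposed above; `RAM`, `HOLES` and `check_tiling` are hypothetical names, and the check only confirms that the RAM blocks plus the pre-allocated "hole" blocks cover $000000 to $CFFFFF with no gaps or overlaps.

```python
MB = 0x100000  # 1 MiB

# Inclusive (start, end) address ranges from the proposal.
RAM = [
    (0x000000, 0x3FFFFF),  # stock SE RAM (4MB)
    (0x600000, 0x8FFFFF),  # hypothetical PDS RAM, block 1 (3MB)
    (0xC00000, 0xCFFFFF),  # hypothetical PDS RAM, block 2 (1MB)
]
# Ranges the INIT would pre-allocate as non-purgeable "hole" blocks.
HOLES = [
    (0x400000, 0x5FFFFF),  # 2MB
    (0x900000, 0xBFFFFF),  # 3MB
]
TOP_OF_RAM = 0xCFFFFF


def size(region):
    """Size in bytes of an inclusive (start, end) range."""
    start, end = region
    return end - start + 1


def check_tiling(ranges, top):
    """True if the ranges cover 0..top exactly, with no gaps or overlaps."""
    expected = 0
    for start, end in sorted(ranges):
        if start != expected:
            return False
        expected = end + 1
    return expected == top + 1


ram_total = sum(size(r) for r in RAM)
hole_total = sum(size(r) for r in HOLES)

if __name__ == "__main__":
    print(f"heap span : {(TOP_OF_RAM + 1) // MB} MB")
    print(f"RAM       : {ram_total // MB} MB")
    print(f"holes     : {hole_total // MB} MB")
    print("tiles cleanly:", check_tiling(RAM + HOLES, TOP_OF_RAM))
```

Run directly, it reports a 13MB heap span made up of 8MB of RAM and 5MB of holes.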

It would mean that a Mac SE could use up to 8MB of RAM, even though no more than about 3MB (in System 7) could be allocated to any single application, since the holes split RAM into separate chunks. But since a 4MB Mac SE under System 7 only has about 3MB free anyway, it'd still be about twice as useful.
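
The back-of-envelope numbers behind "about twice as useful", as a Python sketch. It assumes, from the post, that System 7 takes roughly 1MB at the bottom of a stock 4MB SE, and it assumes an application partition has to be one contiguous block, so the largest free region caps the partition size. `SYSTEM_FOOTPRINT` and `free_regions` are hypothetical names.

```python
MB = 0x100000  # 1 MiB

# Assumption taken from the post: System 7 leaves roughly 3MB free on a
# stock 4MB SE, i.e. the system itself uses about 1MB at the bottom of RAM.
SYSTEM_FOOTPRINT = 1 * MB

# Free regions once the hypothetical PDS RAM and hole blocks are in place
# (inclusive start/end addresses).
free_regions = [
    (SYSTEM_FOOTPRINT, 0x3FFFFF),  # stock RAM above the system (about 3MB)
    (0x600000, 0x8FFFFF),          # PDS block 1 (3MB)
    (0xC00000, 0xCFFFFF),          # PDS block 2 (1MB)
]


def size(region):
    """Size in bytes of an inclusive (start, end) range."""
    start, end = region
    return end - start + 1


stock_free = 4 * MB - SYSTEM_FOOTPRINT
expanded_free = sum(size(r) for r in free_regions)
# Assumed: a partition must fit in one contiguous region, so the biggest
# single region is the ceiling for any one application.
largest_partition = max(size(r) for r in free_regions)

if __name__ == "__main__":
    print(f"stock free    : {stock_free / MB:.0f} MB")
    print(f"expanded free : {expanded_free / MB:.0f} MB")
    print(f"gain          : {expanded_free / stock_free:.2f}x")
    print(f"max partition : {largest_partition / MB:.0f} MB")
```

Under those assumptions it comes out at about 7MB free versus 3MB (roughly 2.3x), with no single region larger than 3MB.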


I'm sort-of aware this has probably been proposed a gazillion times ;-) !

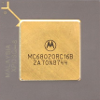

SE Memory map: