cheesestraws

Since logistics currently requires software projects, I've been having a go at building a VNC server for A/UX 3, both inspired by and based on @marciot's MiniVNC. Although this started off as a straight fork, most of it has in fact now been rewritten because of the very different problems that VNC on A/UX faces (and because I'm better at C than C++, but ssssh). It isn't "finished" yet, but I thought people might be interested in how far it's come. This has been a journey of swearing and reverse-engineering and has been rather enjoyable so far.

Here's a video to hook you in. This is running on real hardware, an overclocked Q650. You can see that even with its current rather basic incremental update support, it's already pretty responsive, and the computer is totally usable over it (well, totally usable except for only half the keyboard working so far, etc, etc). Note also that the VNC session is maintained across user sessions, and it manages the change in colour configuration without going funny.

View attachment vnc-rec.mov

The code as it stands is at https://github.com/cheesestraws/aux-minivnc. Part of it is a kernel module to provide access to the framebuffer and input devices from outside the Mac environment. This is probably the first non-trivial A/UX kernel module written in some considerable time (though SolraBizna has beaten me to being the first at all). There's then a userspace daemon which talks the VNC protocol, manages the remote framebuffer and wrangles input.
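
For anyone wondering what "basic incremental update support" can mean in a daemon like this, here is a minimal sketch. It is not taken from aux-minivnc: the function name `dirty_row_span` and the idea of keeping a previous copy of the framebuffer to compare against are assumptions made purely for illustration, and it is written for a modern C compiler rather than the one that ships with A/UX. It finds the first and last rows that differ between two copies of the screen, so that only that band needs to be sent to the client.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * Hypothetical helper: compare two framebuffer copies row by row and
 * report the first and last rows that differ.
 * Returns 1 if anything changed (and fills *first / *last),
 * or 0 if the two copies are identical.
 */
static int dirty_row_span(const unsigned char *prev, const unsigned char *cur,
                          size_t row_bytes, size_t rows,
                          size_t *first, size_t *last)
{
    size_t top = 0;
    size_t bottom;

    assert(prev != NULL && cur != NULL && first != NULL && last != NULL);

    /* Skip unchanged rows from the top. */
    while (top < rows &&
           memcmp(prev + top * row_bytes, cur + top * row_bytes, row_bytes) == 0) {
        top++;
    }
    if (top == rows) {
        return 0; /* nothing changed */
    }

    /* Skip unchanged rows from the bottom; stops at 'top' at the latest. */
    bottom = rows - 1;
    while (bottom > top &&
           memcmp(prev + bottom * row_bytes, cur + bottom * row_bytes, row_bytes) == 0) {
        bottom--;
    }

    *first = top;
    *last = bottom;
    return 1;
}
```

A caller would send rows `first` through `last` as a single rectangle and then copy the current framebuffer over the previous one. This is deliberately crude: one changed pixel at the top and one at the bottom mark the whole screen as dirty, which is the sort of thing a smarter scheme would split into tiles.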

Why the big differences between this and MiniVNC for the Mac?

- Easier: we don't have to deal with MacTCP, we can just use standard UNIX sockets. The native network stack is much faster, which means we need to worry less about how much we're sending and how we're scheduling that sending. So, at the moment, we are using raw encoding rather than TRLE and it's already responsive. This would preclude running over MacIP, but A/UX doesn't support MacIP anyway, so this isn't a problem.

- Easier: we've just got more CPU and memory to play with. We're not trying to deal with 68000s, we're not dealing with Mac Pluses.

- Harder: we need to deal with more complicated situations where display parameters change under us, for example between sessions. Some of those sessions aren't even Mac-like: the console emulator session type, which just gives a big full-screen terminal, is only pretending to be Mac-like, and nothing of the Mac OS is actually running.

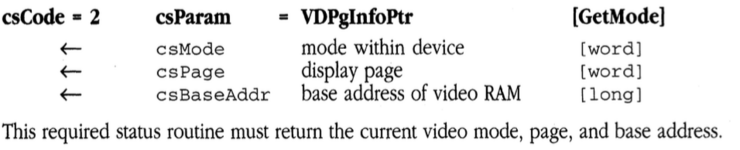

- Harder: there's no central place to get the information we need. Under Mac OS, we can just ask QuickDraw for all the details about screens and so forth. On A/UX, we can't. QuickDraw lives inside the Mac world and if we want to survive across multiple sessions, including terminal sessions, we can't use it. So we need to ferret out information from corners of the kernel, talk to the kernel's cradle around the slot manager and video drivers, and so forth.

- Harder: we can't talk to the hardware directly. Getting access to things like framebuffers can only be done via custom code in the kernel (I hoped to use the user interface driver for this, but we can't). So we live in a split world where there is a kernel module and a userspace component, and at the moment we have to copy the framebuffer out of kernel space, rather than consulting it directly. This adds up to 90ms of latency to updating the framebuffer. There is a way around this, but it requires me to go and do some more disassembly of the kernel...
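
To make the "raw encoding" point above a bit more concrete, here is a rough sketch of what a raw update looks like on the wire, following the FramebufferUpdate layout in the RFB protocol specification (RFC 6143). It is not the code from aux-minivnc: the helper names (`put_u16`, `put_u32`, `raw_update_header`, `raw_payload_len`) are made up for this example, and it assumes a modern C99 compiler with `<stdint.h>` rather than the A/UX toolchain.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define RFB_MSG_FRAMEBUFFER_UPDATE 0  /* server-to-client message type */
#define RFB_ENCODING_RAW           0  /* encoding type for raw pixels */
#define RAW_UPDATE_HEADER_LEN      16 /* 4-byte message header + 12-byte rectangle header */

/* RFB sends multi-byte integers in big-endian (network) order. */
static void put_u16(uint8_t *p, uint16_t v)
{
    p[0] = (uint8_t)(v >> 8);
    p[1] = (uint8_t)(v & 0xff);
}

static void put_u32(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)((v >> 16) & 0xff);
    p[2] = (uint8_t)((v >> 8) & 0xff);
    p[3] = (uint8_t)(v & 0xff);
}

/*
 * Hypothetical helper: fill in the header of a FramebufferUpdate that
 * carries exactly one raw-encoded rectangle. Returns the header length.
 */
static size_t raw_update_header(uint8_t out[RAW_UPDATE_HEADER_LEN],
                                uint16_t x, uint16_t y,
                                uint16_t w, uint16_t h)
{
    assert(out != NULL);
    out[0] = RFB_MSG_FRAMEBUFFER_UPDATE;
    out[1] = 0;                 /* padding */
    put_u16(out + 2, 1);        /* number of rectangles */
    put_u16(out + 4, x);
    put_u16(out + 6, y);
    put_u16(out + 8, w);
    put_u16(out + 10, h);
    put_u32(out + 12, RFB_ENCODING_RAW);
    return RAW_UPDATE_HEADER_LEN;
}

/* Number of pixel bytes that follow the header for a raw rectangle. */
static size_t raw_payload_len(uint16_t w, uint16_t h, unsigned bytes_per_pixel)
{
    return (size_t)w * (size_t)h * (size_t)bytes_per_pixel;
}
```

With raw encoding the server writes these 16 bytes to the socket followed by `raw_payload_len(...)` bytes of pixel data in the negotiated pixel format, left to right and top to bottom. A full 640x480 screen at one byte per pixel is 307,200 bytes per update, which is why this only makes sense when the network stack is fast enough not to be the bottleneck.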